Unleashing the Power of AI: the KPMG Pioneering Approach to AI Security

Artificial intelligence (AI) has emerged as a transformative force across industries, reshaping business processes, enhancing decision-making, and driving unprecedented efficiencies. However, as AI systems grow in complexity and capability, regulating their reliability, security, and fairness becomes paramount. Organizations must elevate their teams as well as the tools enabling them to drive innovation that can endure evolving risks and regulations.

Governance & Trusted AI

Organizations looking to benefit from their investments in AI understand the criticality of creating justified trust in the systems. They must determine if these systems are worthy of trust through governance techniques that showcase how AI use cases align to the desired outcomes within the enterprise’s defined risk appetite, as well as establish programs to enhance employee trust in AI systems.

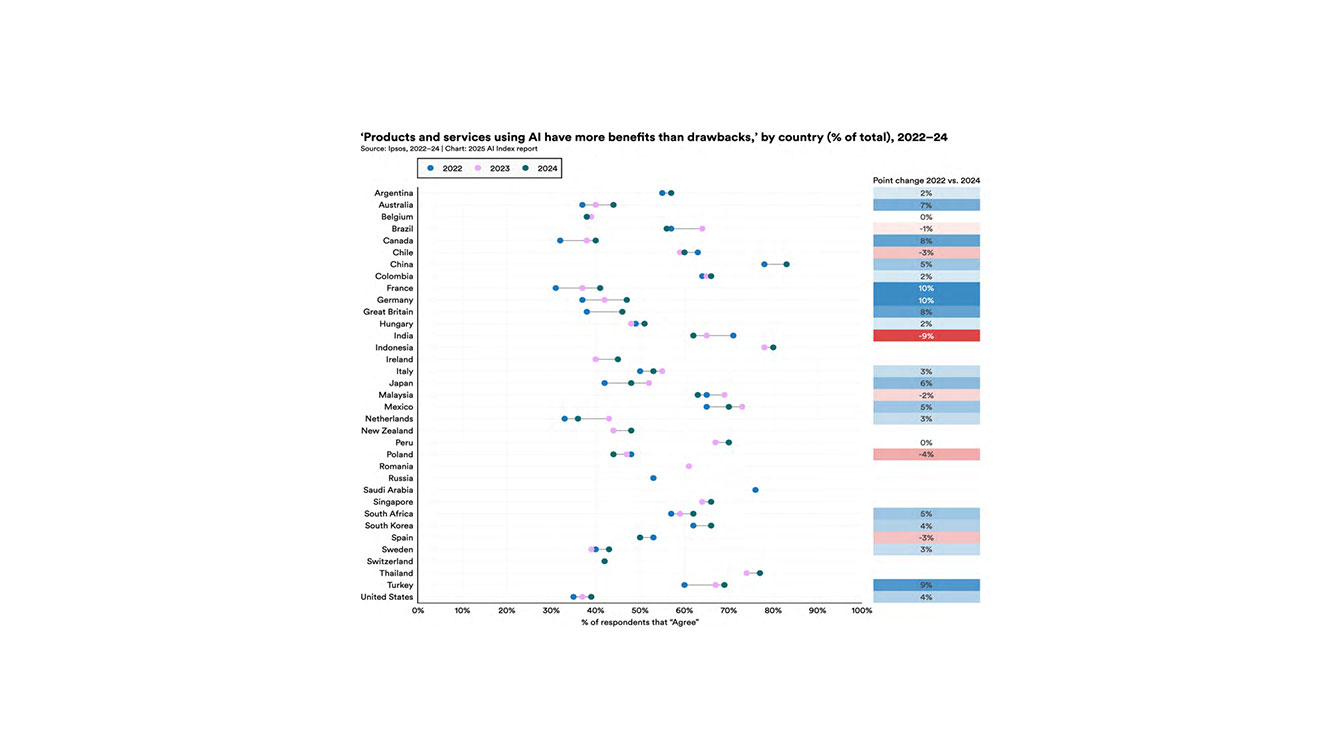

In the recent AI Index Report1 released by Stanford University, we see that globally there has been a cultural shift in terms of AI optimism over the last three years. Additionally, historically skeptical nations like Germany (+10%), France (+10%), Canada (+8%), Great Britain (+8%), and the US (+4%) are experiencing an increase in optimism around usage as AI adoption has become more visible and regulated.

This is further supported by a KPMG recent publication, Trust, Attitudes and Use of Artificial Intelligence: A Global Study 2025, where across respondents, KPMG found 54% of Americans accept or approve of AI today.

Governance and trusted AI are crucial for deploying use cases with safe, secure, and trustworthy practices. They also help organizations realize the full value of AI within their workforce, especially given the significant upfront investment needed to implement these solutions. This is true whether you're buying these solutions or building them in-house.

AI Security Testing

AI/ML models are still applications at their core, and are therefore susceptible to many traditional application vulnerabilities. What makes them unique, however, is the introduction of new vectors of attack that are not present with traditional, deterministic applications. These factors mean that AI/ML models have an even greater attack surface than traditional applications and highlight the critical importance of ongoing security testing throughout their lifecycle. A few types of attacks, both traditional and new, that AI models are susceptible to include:

1

2

This attack involves a malicious entity disguising prompts designed to cause harm as legitimate prompts, thus bypassing basic filters that are in place in most LLM’s and causing unexpected behaviors in the LLM. Organizations could as a result be left with undesirable outcomes such as (but not limited to) the system disclosing sensitive information to an unauthorized audience, revealing IP or restricted details related to the underlying AI system infrastructure or system prompts, or even arbitrary commands being executed in connected systems.

3

4

AI Agent hijacking: AI/ML models inherently have access to back-end API's and resources that many users will not have. An attacker may find ways to take advantage of this access of the agent to exploit a larger attack surface.

The attacker may leverage techniques such as command injection to trigger unauthorized actions by agents leveraging their existing permissions, embedding malicious tool codes in MCP to manipulate actions for a given task, or escalate privileges to override or intercept calls made to enterprise trusted tools.

Keeping in mind the unique nuance of AI/ML adversarial testing, it’s important for organizations to ensure their security organization is both getting involved at the appropriate stage gates across the AI lifecycle as well as providing adequate, frequent testing practices.

From delivering these projects across highly regulated and critical infrastructure clients, our teams have consistently recognized the importance for security teams to:

- Have an understanding and visibility of the existing enterprise AI use case intake process

- Be informed upfront in the initial evaluation of a use case to prepare for future risk assessment consultations or cybersecurity control implementations / activities

- Engage in control and risk mitigation planning discussions with the use case owner once controls are assigned based on the risk profile of the use case

- Support the implementation of security controls for the use case in collaboration with the use case owner

- These controls should take into account the context of the use case as its entire supply chain, including training data, model, and output

- Lead the regular AI red teaming / adversarial testing efforts and provide remediation plans to mitigate determined risk exposure

- Manage ongoing security monitoring for vulnerabilities and risks in the AI use case after deployment

- Ensure threats to AI/ML models are included in your cyber threat intelligence research and monitoring to remain up to date on mitigation techniques

It is critical for any robust use case that the security team is adequately engaged to evaluate and safeguard these systems.

Scaling through Technology Enablement

For those that have been on the journey of establishing a governance council and processes for the last several years, one thing has been clear from the start: manual processes should be thoroughly evaluated to support future technology-enabled processes.

The sheer volume of use cases has compelled organizations to reevaluate their technology stack, focusing both on leveraging AI within the organization, and supporting broader governance and security requirements in a sustainable way. This is especially important given the specialized skillset recognized as essential in today’s market.

It is critical for organizations to evaluate their existing platform ecosystem, particularly focusing on their security role within the governance structure. They should begin questioning whether their ecosystem adequately supports their security initiatives. Whether at the beginning of your AI journey or looking to validate your current path, a CISO’s team may ask:

- Do we have visibility into AI assets, both internal to the enterprise and from third parties? Is it manually recorded, or are there technology enabled discovery capabilities we can leverage?

- How are we documenting and monitoring the provenance of the data that informs our AI use case?

- How do we engage the right stakeholders for review and approval of these use cases?

- How are we incorporating threat modeling and red teaming into our AI lifecycle?

- Are we scanning models before they are deployed to production? Do we have any runtime scanning for post deployment?

- Are there opportunities to standardize and automate to ensure use cases are getting consistent coverage?

- Are our current logging and monitoring capabilities covering off on our AI / Agentic use cases to provide a comprehensive audit trail?

While there are several other questions your organization may prioritize, it is crucial to consider what is referred to by Gartner as AI Trust, Risk, and Security Management (AI TRiSM). It is also important to identify technologies that can further support your processes today. As a real-world example, KPMG LLP saw this need and incubated an AI TRiSM platform, Cranium, a CRN 2023 Stellar Start Up, which provides discovery, ongoing monitoring, and attestation as core competencies critical for supply chain visibility and security. This product helps our organization and others we serve add another layer of defense, which has been highlighted as one of the most critical foundational controls for mitigating risk (even in the era of Agentic AI as highlighted by Palo Alto Unit 42’s study of Agentic AI Threats, May 1st, 2025). Unique capabilities from a security platform such as Cranium also allow us to integrate these data points into our governance processes and inform other key enterprise wide Governance, Risk, and Compliance platforms in the lifecycle of AI governance.

Attestation in the Age of AI

It’s imperative for organizations not only to establish processes that truly enable governance and innovation, but also to ensure they can adequately represent their due diligence to external and internal stakeholders.

We are seeing an increased focus from internal audit functions on evaluating existing processes. Without an existing SOC2 or similar report for AI, organizations must adequately explain their governance actions and demonstrate adherence to Trusted AI principles, including security, privacy, and explainability, throughout the AI lifecycle.

We have also observed a growing focus from organizational leaders, such as the Institute of Internal Auditors, which on March 12, 2025, released a formal letter in response to a request for information from the US Office of Science and Technology Policy (OSTP), highlighting their key focus on providing transparency and accountability as a priority as the US looks to continue American leadership in AI.

This emphasis on transparency and accountability drives the development of risk-based scorecard views of AI posture, including security principles. This results in a System Card, which can be used to evaluate AI use cases and is tailored to the organization, allowing for consistent and repeatable reporting on the robustness of these use cases.

Conclusion/Call to action

As AI continues to revolutionize our businesses, assessing the trustworthiness and security of these systems is more critical than ever. KPMG offers a full suite of services designed to address the multifaceted risks associated with AI systems. Our approach includes establishing robust governance programs tailored to your organization's unique needs, implementing an AI Security Framework to safeguard your AI assets, and integrating leading technology to streamline and automate governance processes.

Additionally, we provide ongoing AI testing via managed services to assess the resilience and reliability of your systems. In the event of a security incident, our incident response and recovery services are on hand to mitigate impacts and restore normal operations, while our AI forensic services offer in-depth analysis to understand and prevent future incidents.

With KPMG as your trusted advisor, you can confidently navigate the evolving landscape of AI security and governance.

Our suite of services provides clients confidence that they will have a strategy across technology, process, and people. Having been recognized as #1 for Quality in AI Advice and Implementation, 1st for Authority in Risk, 1st in First Choice for Program Risk, and 1st in First Choice for Responding to Regulation by Source, KPMG is positioned at the forefront of delivering governance programs that unlock significant value for our clients in terms of both innovation and risk mitigation.

Insights by Topic Insights on cyber security

KPMG professionals are passionate and objective about cyber security. We’re always thinking, sharing and debating. Because when it comes to cyber security, we’re in it together.

Meet our team