Artificial Intelligence (AI) is reshaping the competitive landscape across all sectors of the economy, bringing significant business and societal benefits as well as emerging risks to people.

Research by the University of Queensland and KPMG shows that trust in AI is currently low in Australia. Concerns about privacy violations, unintended bias and inaccurate outcomes, together with recent high-profile scandals, have further reduced public trust in this technology.

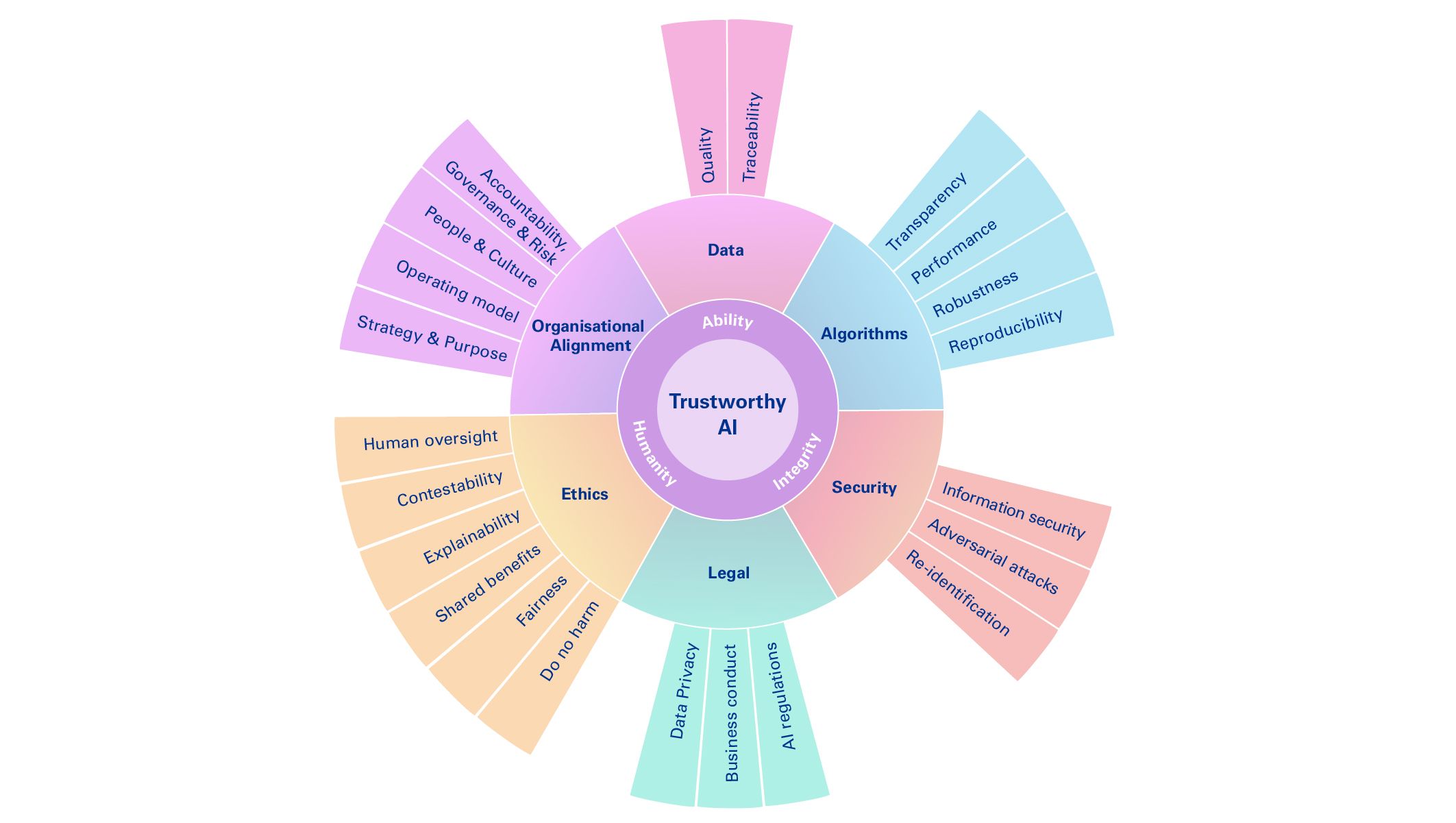

Trust underpins the acceptance and use of AI and requires a willingness to be vulnerable to AI systems, by sharing data or relying on automated AI decisions. This trust is built on positive expectations of the ability, humanity and integrity of the AI systems, and the people and organisations developing and deploying AI.

Many organisations are still at the early stages of maturity in establishing the ethical, technical and governance foundations to manage the risks and realise the opportunities associated with AI.

Organisations and their leaders now need to work towards achieving trustworthy AI. There is no silver bullet for this process. Organisations should tailor their approach to the benefits, challenges, risks and opportunities that are unique to their industry and context, and ensure it is proportionate to the potential impacts of their AI systems on stakeholders.

This is not something that a single executive, or business function, can do alone. It requires an organisation-wide approach that integrates and connects key functional areas of the organisation at each stage of the AI lifecycle.

Presented in conjunction with The University of Queensland.

Model of Trustworthy AI

Ability: AI systems are fit-for-purpose and perform reliably to produce accurate output as intended.

Humanity: AI systems are designed to achieve positive outcomes for end-users and other stakeholders and, at a minimum, do not cause harm or detract from human well-being.

Integrity: AI systems adhere to commonly accepted ethical principles and values, uphold human rights, and comply with applicable laws and regulations.

For more detail, download the summary factsheet of our model to help design, manage and use trustworthy AI systems.

Explore the report

Navigate between the chapters below for a topic-focused summary.

At a glance

Chapter one summary: Aligning AI systems with the organisation

When used responsibly to support the organisation’s purpose and create value for stakeholders, AI can enhance trust by demonstrating ability, humanity and integrity.

Organisations must ensure that any AI system being designed, procured or implemented is aligned with the organisation’s overall strategy. They must consider how people, culture, governance and risk mechanisms support and reinforce trustworthy AI systems throughout their lifecycle.

Chapter two summary: Ensuring quality and traceable data

AI systems learn from their input and training data. If an AI system is built on incomplete, biased or otherwise flawed data, mistakes will likely be replicated – at scale – in its outputs.

Such trust failures can be prevented by following best practice in assessing the quality and traceability of the data used to build AI. This includes ensuring reliable, diverse and comprehensive datasets that are suitable for the intended purpose of the AI system.
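As an illustration only, the kind of data quality assessment described above could start with two simple measures: how often required fields are missing, and how well each group is represented in the dataset. The sketch below is not taken from the report; the function name `data_quality_report`, the field names and the sample records are all hypothetical, and a real assessment would cover many more dimensions (provenance, labelling accuracy, timeliness and so on).

```python
from collections import Counter


def data_quality_report(rows, required_fields, group_field):
    """Summarise completeness and group representation for a list of dict records.

    Hypothetical helper for illustration; not part of the report's model.
    """
    total = len(rows)
    if total == 0:
        raise ValueError("cannot assess an empty dataset")

    # Share of records in which each required field is missing or empty.
    missing_rate = {
        field: sum(1 for r in rows if r.get(field) in (None, "")) / total
        for field in required_fields
    }

    # Share of records per group, to reveal under-represented groups.
    counts = Counter(r.get(group_field) for r in rows)
    group_share = {group: count / total for group, count in counts.items()}

    return {"missing_rate": missing_rate, "group_share": group_share}


# Made-up sample records, used only to exercise the function.
records = [
    {"age": 34, "income": 72000, "region": "urban"},
    {"age": None, "income": 51000, "region": "urban"},
    {"age": 45, "income": 64000, "region": "urban"},
    {"age": 29, "income": 58000, "region": "rural"},
]

report = data_quality_report(records, ["age", "income"], "region")
print(report["missing_rate"])
print(report["group_share"])
```

A report like this does not decide whether a dataset is suitable for its intended purpose; it only surfaces gaps (here, a missing age value and a small rural sample) that people then need to judge in context.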

Chapter three summary: Opening the algorithmic black box

The machine learning models at the heart of an AI system’s power to make predictions and decisions are among its most complex components.

The technical features of the algorithm should be documented and designed to enable understanding of how the end-to-end process works, and how it arrives at its outcomes. To minimise risks, detect errors and prevent bias, organisations must simultaneously assess the performance, robustness, reproducibility and transparency of algorithms.
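To make the idea of assessing several properties together more concrete, the sketch below checks a deliberately trivial model for performance (accuracy), reproducibility (the same seed gives the same model) and robustness (predictions do not flip under small input changes). Everything here is an assumption for illustration: the toy threshold "model", the function names and the data are hypothetical, and the report does not prescribe these particular checks or metrics.

```python
import random


def train_threshold(data, seed):
    """Toy 'training': set the threshold to the mean input of a seeded random subsample."""
    rng = random.Random(seed)  # a local, seeded generator keeps runs repeatable
    sample = rng.sample(data, k=len(data) // 2)
    return sum(x for x, _ in sample) / len(sample)


def predict(threshold, x):
    """Classify an input as 1 if it is at or above the threshold, else 0."""
    return 1 if x >= threshold else 0


def accuracy(threshold, data):
    """Performance: share of labelled examples classified correctly."""
    return sum(predict(threshold, x) == y for x, y in data) / len(data)


def stability(threshold, inputs, epsilon):
    """Robustness: share of inputs whose prediction is unchanged by a shift of +/- epsilon."""
    stable = sum(
        predict(threshold, x - epsilon) == predict(threshold, x) == predict(threshold, x + epsilon)
        for x in inputs
    )
    return stable / len(inputs)


# Made-up labelled data: (input, label) pairs.
data = [(0.1, 0), (0.2, 0), (0.3, 0), (0.4, 0), (0.6, 1), (0.7, 1), (0.8, 1), (0.9, 1)]

model_a = train_threshold(data, seed=7)
model_b = train_threshold(data, seed=7)

print("reproducible:", model_a == model_b)
print("accuracy:", accuracy(model_a, data))
print("stability:", stability(model_a, [x for x, _ in data], epsilon=0.05))
```

Running the checks side by side matters because a model can score well on one property and poorly on another, for example high accuracy with predictions that flip under tiny input changes.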

Chapter four summary: Implementing security protocols and measures

Unlike conventional software solutions, intelligent algorithms at the heart of AI systems introduce vulnerabilities that require special consideration.

Stakeholders need to be confident that the integrity of the system will be kept at a high standard by the people within the organisation and protected from potential external malicious activities. Robust and clear information security and access protocols help increase confidence in protecting the confidentiality, integrity and availability of data.

Chapter five summary: Understanding the legal and conduct obligations

Understanding current and upcoming AI and data regulations can be challenging.

Leading organisations help build trust in AI by adopting practices that anticipate the areas likely to fall within the scope of upcoming AI regulations and that address the limitations of current legislative frameworks. Key steps include investing in targeted areas, raising the bar on data privacy practices and understanding the impact of AI on conduct of business obligations.

Chapter six summary: Embedding ethics and human rights considerations

To be trusted, AI systems need to be consciously developed and implemented to align with six key ethical AI principles: do no harm, fairness, shared benefits, explainability, contestability and human oversight.

The ethical challenges and human rights risks posed by AI should be assessed carefully and embedded into the organisation’s governance and risk frameworks. Particular attention must be given to impacts on vulnerable stakeholders and populations, because harm to these groups is especially damaging to stakeholder trust.

Achieving Trustworthy AI: A Model for Trustworthy Artificial Intelligence

This KPMG and University of Queensland report provides an integrative model for organisations looking to design and deploy trustworthy AI systems.
