How risk and compliance can accelerate generative AI adoption

Harness the power of generative AI in a trusted manner

Businesses are keen to capture the benefits of generative AI. Too often, however, risk concerns are stalling progress. A robust AI risk review process can help.

It’s been almost a year since generative AI upended our understanding of what artificial intelligence could do for business. The emerging technology’s ability to consume and organize vast amounts of information, mimic human understanding, and quickly generate content created enormous expectations for a new spurt of technology-led productivity growth. In a KPMG survey of top executives, more than 70 percent of respondents said they expected to implement a generative AI solution by spring 2024, and more than 80 percent said they expected the technology to have “significant impact” on their businesses by the end of 2024.

But in many organizations, generative AI plans are stuck or progressing slowly as business units struggle to account for all the risks: security, privacy, reliability, ethical, regulatory, intellectual property, and more. This is where the risk function (risk, compliance, and legal teams) can step in and play a critical role. By developing and activating a process to quickly assess and control risks around generative AI models and data sets, risk teams can become an enabler for the business rather than a speed bump that limits agility.

In this paper, we will look at how risk functions can help their organizations move forward with generative AI adoption by defining the scope and severity of the risks; aligning principles, processes, and people to manage them; and establishing a durable, practical framework for trusted AI governance. Our focus here is on generative AI, but the Trusted AI and other governance practices we discuss also apply to how risk can support the organization’s wider AI agenda.

Getting started: Classifying generative AI risks

Risk awareness has risen, and leaders also believe their risk professionals are prepared to deal with the challenges posed by generative AI. More than three-quarters of respondents in a June KPMG survey said they were highly confident in their organization’s ability to address and mitigate risks associated with generative AI. However, this is not the reality we see on the ground. Many organizations remain stuck, searching for leadership, consensus, and a rational approach to resolving generative AI risk issues and establishing guardrails for ongoing protection. Classifying the risks is the first step, and we group them into four areas:

Model context and governance: Understanding AI model context and governance is paramount to any generative AI deployment. The context defines the purpose, scope, and ethical framework within which the generative AI model operates. It also defines the required training data and its sources and the model architecture, and it determines applicable risks such as security, fairness, and sustainability. Governance provides the structure to manage and oversee the model's development and usage.

Input data: The quality, relevance, and fairness of input data directly affect the model's effectiveness and ethical soundness. Using high-quality, diverse data sets to train and fine-tune generative AI models can also help reduce hallucinations. In line with the defined context, organizations need to ensure that the data sources and pipelines used by generative AI models for training, validation, and inference are trustworthy and as free from bias as possible.

Output data: Generative AI can produce dazzling results, such as well-argued legal briefs, insightful reports, and in-depth analyses. However, before organizations trust these outputs, they need robust quality assurance practices, both manual and automated, in place to check and fix results. The quality and completeness of the input data, the model logic, and the infrastructure all affect the outputs of a generative AI model. Without the right measures in place, outputs could be biased, unethical, irrelevant, or incoherent, could expose sensitive data, or could have legal and safety consequences.
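To illustrate the automated side of output quality assurance, here is a minimal Python sketch of a pre-release screen. It is an assumption-laden example, not a KPMG method: the function `screen_output`, the `ReviewResult` type, the word-count threshold, and the two regex rules (email addresses and US SSN-like numbers) are all hypothetical stand-ins for checks an organization would define from its own policies. Anything the screen flags is held for manual review rather than released.

```python
import re
from dataclasses import dataclass, field

# Hypothetical screening rules for illustration only; a real program would
# derive its checks from the organization's own policies.
SENSITIVE_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "US SSN-like number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


@dataclass
class ReviewResult:
    approved: bool
    issues: list[str] = field(default_factory=list)


def screen_output(text: str, min_words: int = 5) -> ReviewResult:
    """Apply simple automated checks to a generated response before release."""
    issues = []
    # Very short answers are treated as incomplete.
    if len(text.split()) < min_words:
        issues.append("response too short to be useful")
    # Flag text that looks like sensitive data.
    for label, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(text):
            issues.append(f"possible {label} exposed")
    # Any flagged response is held for manual review instead of being released.
    return ReviewResult(approved=not issues, issues=issues)


if __name__ == "__main__":
    result = screen_output("Contact jane.doe@example.com for the draft brief today.")
    print(result.approved, result.issues)
```

Pattern checks like these catch only the most obvious problems; judgments about bias, relevance, or legal exposure still need the manual review the paragraph above describes.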

Model logic and infrastructure: The model logic defines how the model operates, processes data, and generates responses or outputs. Will the AI application only look for absolute matches in the data provided when generating responses? Will it be able to “guess” the response? Will the model access information from the internet? The answers depend on the context of the application and determine how the model logic is set up, which directly influences the outcomes and decisions of a generative AI model.

The infrastructure provides the necessary computational resources and environment for execution, underpinning the model’s functionality and scalability. Understanding and selecting the right infrastructure can help organizations choose cost-effective hardware and software resources, while meeting performance, reliability, flexibility, and scalability goals. Careful infrastructure design also enables organizations to future-proof their environment.

A rising risk awareness

Generative AI presents an array of multi-layered and multi-disciplinary risk issues—in some cases introducing pure black-box unknowns. These challenges will require new depth, expertise, and leadership from the risk function.

Corporate leaders are well aware of generative AI’s operational, regulatory, and reputational risks, and they are clearly looking to the risk team to manage them. In an April KPMG survey of top leaders, 40 percent of respondents said legal and copyright issues were a chief risk concern. In a second survey in June, 61 percent said these were top concerns, an increase of more than 50 percent. There were similar increases in concerns over misinformation, inaccuracies, and weaponization of generative AI content.

How risk can enable generative AI adoption

Business leaders are looking to generative AI to increase efficiency and enable new sources of growth. In a KPMG survey, top leaders cited competitive pressures as a chief reason to adopt generative AI in operations. They also expect generative AI to open new possibilities in sales, marketing, and product development. And they are impatient to get going so they don’t fall behind.

Working with other functions—IT, cyber, finance, HR, etc.—risk can help shorten the path to generative AI implementation. We have identified four key risk areas that need to be addressed before any implementation proceeds. These are just some of the elements of the larger AI risk program that will provide ongoing oversight.

To expedite reviews and approvals on these risk topics, companies can set up a multidisciplinary generative AI taskforce. When a business unit or function proposes a generative AI implementation, finance should assess its potential payoff and strategic priority, while IT determines how it can be implemented (including choosing vendors, partners, and the supporting LLM). Then risk should swing into action, using a streamlined, repeatable governance and review process that includes collaboration with the legal, compliance, and cybersecurity departments.
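The taskforce sequence described above (finance, then IT, then risk working with legal, compliance, and cybersecurity) can be sketched as a simple intake pipeline. This is a hypothetical Python illustration, not a prescribed process: the `Proposal` fields, the approval thresholds, the stage functions, and the extra privacy sign-off for personal data are all assumptions chosen to show how a fixed, repeatable order of reviews works.

```python
from dataclasses import dataclass, field


@dataclass
class Proposal:
    """A business unit's generative AI proposal (hypothetical fields)."""
    name: str
    expected_value: float      # finance's estimate of the payoff
    strategic_priority: int    # 1 = low, 3 = high
    vendor_selected: bool      # IT has chosen vendor, partner, and supporting LLM
    uses_personal_data: bool
    log: list[str] = field(default_factory=list)


def finance_review(p: Proposal) -> tuple[bool, str]:
    # Finance assesses potential payoff and strategic priority.
    if p.expected_value > 0 and p.strategic_priority >= 2:
        return True, "payoff and priority accepted"
    return False, "deprioritized"


def it_review(p: Proposal) -> tuple[bool, str]:
    # IT determines how the solution can be implemented.
    if p.vendor_selected:
        return True, "implementation path defined"
    return False, "vendor, partner, or LLM not yet chosen"


def risk_review(p: Proposal) -> tuple[bool, str]:
    # Risk runs the same review for every proposal, together with legal,
    # compliance, and cybersecurity; the privacy add-on is an assumption.
    reviewers = ["legal", "compliance", "cybersecurity"]
    if p.uses_personal_data:
        reviewers.append("privacy")
    return True, "sign-off needed from " + ", ".join(reviewers)


# The fixed order makes the process repeatable across proposals.
STAGES = [("finance", finance_review), ("IT", it_review), ("risk", risk_review)]


def run_intake(p: Proposal) -> bool:
    """Route a proposal through each stage, stopping at the first rejection."""
    for stage_name, review in STAGES:
        ok, note = review(p)
        p.log.append(f"{stage_name}: {note}")
        if not ok:
            return False
    return True
```

Stopping at the first rejection keeps risk reviewers focused on proposals that finance and IT have already cleared, and the log gives each proposal an audit trail of who decided what.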

Build a Trusted AI framework

Organizations that are planning to use generative AI in their business must understand the importance and strategic imperative of ensuring that all AI applications, including those using generative AI, are trustworthy and responsible. Reputations are at stake, and without governance in place to ensure the technology is operating ethically and reliably, businesses risk damaging their relationships with customers, employees, partners, and the market.

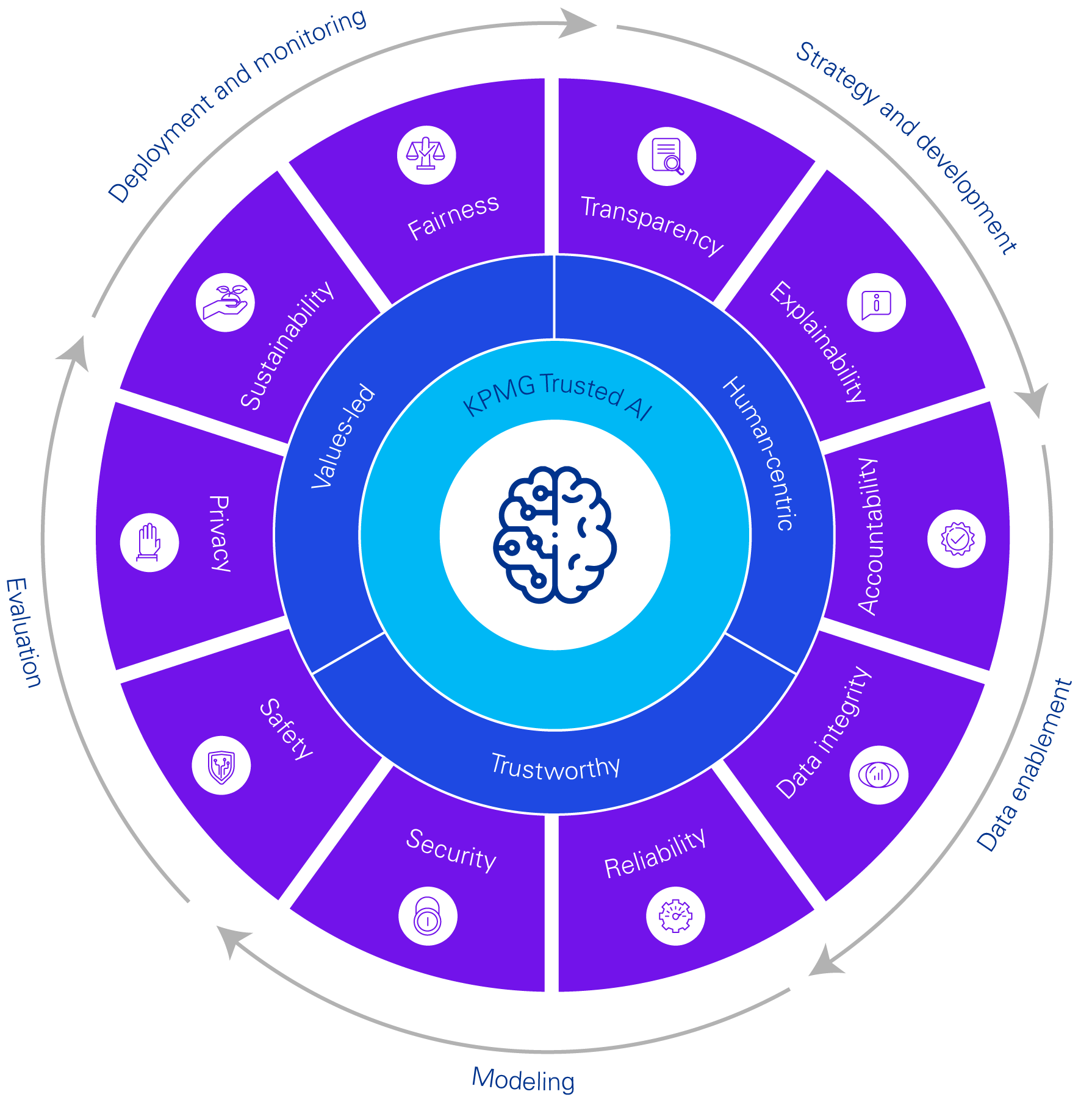

Creating trustworthy and ethical AI is a complex business, regulatory, and technical challenge. KPMG has developed a Trusted AI framework that stresses fairness, explainability, transparency, accountability, security, privacy, safety, data integrity, sustainability, and reliability.

The KPMG Trusted AI framework can be used across AI activities, from establishing best practices for machine learning and large language model teams to defining data quality standards. The framework provides an ethical and responsible “north star” for organizations as they build and expand their generative AI programs, helping them manage the risks associated with AI without hindering or blocking deployments. By crafting processes and policies that bring Trusted AI to life throughout the entire deployment lifecycle, organizations can make these principles part of their DNA.

The central challenge in AI risk management lies in the dynamic nature of each AI application, which comes with its own business processes, impact, and risks. However, a trusted AI framework can be a solid foundation that is adaptable to any generative AI application. Defining the framework is only the start: risk functions should also ensure that the framework is operationalized and adopted across the organization. Adoption and operationalization hinge on a framework that is not too theoretical and does not overly burden the business. KPMG, for example, uses commercially available software products to automate aspects of AI risk management and governance for itself and its clients.

Here are some of the questions the board should be discussing with risk:

- Is our governance process agile enough to ensure that all AI risks are identified, managed, and mitigated in a timely way?

- Are stakeholders in business and IT satisfied with the risk review process?

- Are existing risk metrics aligned to AI risks?

- Do we have a model risk management group for all generative AI implementations?

- Are controls appropriate for each stage of the generative AI lifecycle and commensurate with different risk levels?

- Do automated workflows maintain and enhance control postures?

- Does our organization provide a safe zone for development?

- Is experimentation appropriately supported with access to training and data for use cases?

- Are we adequately monitoring and measuring risks post-deployment?

- Can we scale up generative AI solutions effectively while still managing risk?

Among the most important tasks risk can take on now are assisting in the design, operation, and optimization of the large language models used in generative AI and monitoring data quality efforts. This is an opportunity to revisit data production and governance practices, since the success of generative AI adoption depends on the quality of the data feeding the model.

How KPMG can help

At KPMG, we bring extensive industry expertise, cutting-edge technical skills, and innovative solutions to every generative AI project. With our strong partner network, we empower business leaders to leverage the full potential of generative AI in a secure and reliable way. From developing initial strategies and designs to managing ongoing operations, we support our clients throughout the entire AI lifecycle. Our comprehensive risk management services include rapid assessments of existing generative AI frameworks, benchmarking analysis, and implementing a robust governance process from intake to production.

Our Trusted AI framework helps ensure that AI implementation and usage are ethical, trustworthy, and responsible, based on these principles:

We will implement AI guided by our values. They are our differentiator at KPMG and shape a culture that is open and inclusive and that operates to the highest ethical standards. Our values inform our day-to-day behaviors and help us navigate emerging opportunities and challenges. We take a purpose-led approach that empowers positive change for our clients, our people, and our communities.

We will prioritize human impact as we deploy AI and recognize the needs of our people and clients. We are embracing AI to empower and augment human capabilities — to unleash creativity and improve productivity in a way that allows people to reimagine how they spend their days.

We will adhere to our framework across the AI lifecycle and its ten pillars that guide how and why we use AI. We will strive to ensure our data acquisition, governance, and usage practices uphold ethical standards and comply with applicable privacy and data protection regulations and confidentiality arrangements.
