Trusted AI & Systems

- Core Principles for Trusted AI

- Multifaceted Approach to Risk Management

- AI Risk Challenges

- Actions

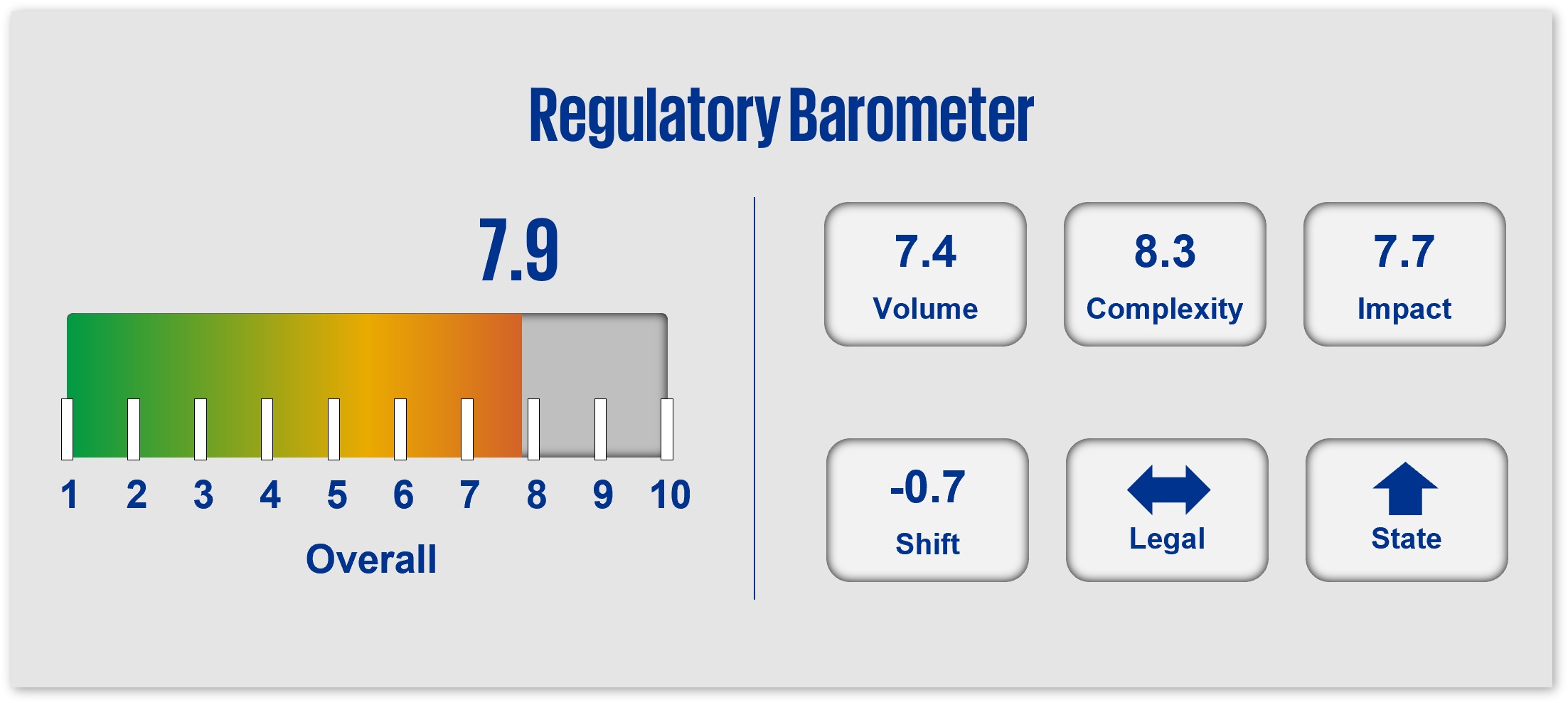

In 2025, anticipate repeal of the current AI Executive Order and the establishment of a new AI Executive Order focused on prioritizing AI innovation and growth across all agencies. Expect continued application of existing regulations and frameworks to AI and systems, alongside a push toward “non-regulatory approaches” such as industry/sector-specific policy guidance, the use of voluntary frameworks and standards (such as the NIST AI Risk Management Framework), and test/pilot programs. The Administration and regulators will continue to focus on the interplay between trusted systems and potential cybersecurity, privacy, and national security risks; they will also increase their focus on the nexus between AI policy and energy policy while lessening the focus on potential “AI harms”. Expect state AI bills and laws to continue to expand, and legal challenges to serve as precedent for new policies and/or rulemakings; the significant volume of AI-related state activity will likely pressure Congress and the Administration to establish a federal AI policy framework.

1. Core Principles for Trusted AI

U.S. efforts to regulate AI and systems/technologies continue to evolve largely through guidance, laws/regulations, and enforcement to address potential consumer harm. The regulatory focus will continue to align on core principles, though their application may be nuanced for specific agency and state focal areas.

These core principles include:

Fairness

AI and systems are deployed in a manner that:

- Mitigates the risk of bias, conflicts of interest, and other consumer harm.

- Incentivizes fair competition (e.g., interoperability and choice).

Explainability & Accountability

Developers, deployers, and acquirers are responsible for clearly demonstrating:

- Understanding of system inputs, applications, and outcomes.

- Clear disclosure providing stakeholders with an understanding of each AI/system, and evidence supporting the accuracy of claims (e.g., to prevent “AI-washing”).

- Efforts to monitor/mitigate risks around synthetic content, including AI-generated “deepfakes” (e.g., authentication, “watermarking”).

- Human accountability at all levels of decision-making.

Risk Management

A risk management framework covering the full AI lifecycle (design, development, use, and deployment) and requiring:

- Governance policies and controls.

- Validation independent of design and development.

- Policies and practices to ensure “safe” design and implementation, including safeguards against harm to people, businesses, and property, and consistency with the intended purpose.

Security and Reliability

Safeguards to reinforce the reliability of AI and systems against potential risks or disruptions through:

- Testing and validation prior to public release and ongoing thereafter to assure AI and systems operate in accordance with their intended purposes and scope.

- Protections/controls against manipulation and unintended use (e.g., adversarial attack, data poisoning, insider threat).

Data Privacy

Collection and use of consumer data comply with applicable data privacy and protection laws and regulations, and incorporate features to limit:

- Use of data to specific and/or explicit purposes, subject to permission, consent, opt-in/out and/or authorization, as required.

- Access to data and systems.

- Retention of data for only as long as needed.

Data Integrity

Data is assessed/tested for accuracy/quality, completeness, consistency, appropriateness, and validity prior to use, and on an ongoing basis, as part of the design and application of technological tools, promoting trust in AI decisioning.

2. A Multifaceted Approach to Risk Management

With the core principles as a base, federal agencies will continue to apply existing and new guidance, regulations, and frameworks toward managing the risks related to AI and systems. Multiple public-private initiatives are underway to inform the understanding of, and promote innovation in, AI model development and related regulatory guardrails through information sharing, testing, and transparency. Related state activity is also gaining momentum at both the legislative and regulatory levels.

The focus on risk management will cover the full AI lifecycle and include:

Frameworks

Cross-agency evaluation of risk management practices under:

- Existing laws, regulations and frameworks (e.g., consumer and employee protection laws and regulations, model and third-party risk management guidance).

- New, evolving, and anticipated frameworks, standards, and regulations (e.g., NIST’s AI and Cybersecurity Risk Management Frameworks, ISO 42001, application of TCPA to AI-generated voice).

Governance

Cross-agency focus on robust and effective governance practices, including:

- Understanding the inter-relationships among the core principles, changing societal dynamics and human behaviors, and AI risks.

- Implementing practices/parameters for development, implementation, and use (e.g., clear statement of purpose; sound design, theory, and logic).

- Testing and validation of systems and risks, including those of third parties.

- Promoting transparency (e.g., what data is used, how data is used, impact assessments) and accountability (e.g., claims, ethical application).

Purpose Limitation & Data Minimization

Driven by the proliferation of available consumer data, the volume of data needed to train AI models and systems, and the increasing number of applications of AI and systems, regulatory attention and enforcement will focus on:

- Compliance with data privacy laws and regulations, including protections against disclosure of sensitive data such as biometric, health, location, and personally identifiable information.

- Responsiveness to consumer data requests (e.g., corrections/revisions, consent, opt in/out, authorization(s), deletion).

- Protections against bias, including data enrichment, as well as protections against adverse threats such as cybersecurity breaches, data poisoning, and misuse of the model/data.

- Limitations on collection, access, and use, as well as permission(s), consent, opt in/out, and/or authorization.

- Retention, safeguards, and disposal practices (e.g., disposal of devices/assets containing customer data).

Continual Improvement

Regulators will expect companies to demonstrate continual improvement of the risk governance/management/controls framework. Better practices are expected to evolve based on public/private information sharing (within and across organizations as well as across regulators), especially in areas such as risk management, decision-making processes, responsibilities, common pitfalls, and TEVV (testing, evaluation, validation, and verification).

3. AI Risk Challenges

The implementation of AI and systems is marked by complexity due to the speed of technological advancements, evolving standards, and the need for effective change management. Regulatory discord and legal challenges at the federal, state, and global levels may exacerbate these complexities.

Speed

The rapid pace of AI system development and deployment, both in-house and through third parties, requires agility in adapting to new applications of existing laws/regulations, evolving standards, and new requirements.

Transparency

Legislators and regulators are looking to impose guardrails that broadly protect consumers, financial stability, and national security from potential misuse of AI and systems. Through laws and regulations, they are looking to hold model developers, deployers, companies, boards, and management accountable for AI and system applications and outputs, placing importance on the ability to explain, and disclose as required, the:

- Goals, functionality, safety, and potential impacts to both internal and external stakeholders.

- Identification, assessment, and mitigation of risks.

- Accuracy, clarity, and consistency, as well as supporting evidence for claims made and associated marketing.

Divergence

Even when aligned on the core principles, diverging regulatory frameworks and expectations across federal, state, and/or global jurisdictions, or by industry or geography, could greatly expand the complexity of both risk and compliance challenges and necessitate a reassessment of current and target-state compliance functions and approaches to compliance risk assessments. Divergences are likely to develop when:

- Laws or regulatory and supervisory frameworks have a multi-jurisdictional reach/application.

- The outcomes of legal challenges inhibit or encourage rulemaking by setting a new precedent.

4. Actions

- Establish and maintain a governance framework: Implement tools and technology to support and operationalize a scalable governance framework that guides the design, use, and deployment of automated systems, ensuring adherence to ethical standards, regulatory requirements, and best practices.

- Conduct pre-deployment testing and ongoing monitoring: Perform thorough pre-deployment testing, risk identification, and mitigation for automated systems to ensure their safety and effectiveness. Run new systems in parallel with existing processes and demonstrate uplift from a regulatory perspective (e.g., a decrease in false positives) before full deployment. Stay up to date on regulatory developments; implement continuous monitoring and evaluation practices to identify potential issues, biases, and undesirable outcomes in a system’s performance; and adjust accordingly.

- Promote transparency and accountability: Foster a culture of transparency and accountability within the organization, clearly communicating the goals, functionality, and potential impacts of automated systems to both internal and external stakeholders.

- Implement effective model risk management (MRM): Adopt a robust MRM framework to ensure models are reliable, accurate, and unbiased. Conduct regular validation, testing, and monitoring of the models, and address any identified issues in a timely manner to minimize adverse impact on investors and comply with regulations. Provide transparency regarding model performance and risk exposure to the board and management.

- Provide human alternatives and remediation: Offer human alternatives and fallback options for customers who wish to opt out of using automated systems, where appropriate. Establish mechanisms for customers to report errors, contest unfavorable decisions, and request remediation, demonstrating the organization’s commitment to fairness and responsible use of technology.

- Understand system strategy and roadmap: Align the organization’s vision, strategy, and operating model for system solutions with its broader goals. Assess board-level oversight and maintain an inventory of the system landscape within the organization. Monitor third-party risks associated with data protection, storage, and access to confidential data, and evaluate acquired software tools to maintain data security and privacy.

- Adapt to the speed of AI development: Adopt a dynamic approach to new applications of existing laws/regulations, evolving standards, and new requirements by implementing streamlined processes for development, testing, and validation; robust training programs; and arrangements to leverage external third-party expertise and technology, and by tailoring strategies to meet unique demands and regulatory requirements across industries and geographies.


Get the latest from KPMG Regulatory Insights

KPMG Regulatory Insights is the thought leader hub for timely insight on risk and regulatory developments.
