This article was first published in the Q1 2024 issue of the SID Directors Bulletin published by the Singapore Institute of Directors.

Although generative artificial intelligence (AI) is not a new technology, it has made groundbreaking advances in recent years. Some of the earliest uses of AI were to analyse large amounts of data. However, the technology has since evolved into a tool that can fulfil specific user requests with remarkable speed and accuracy.

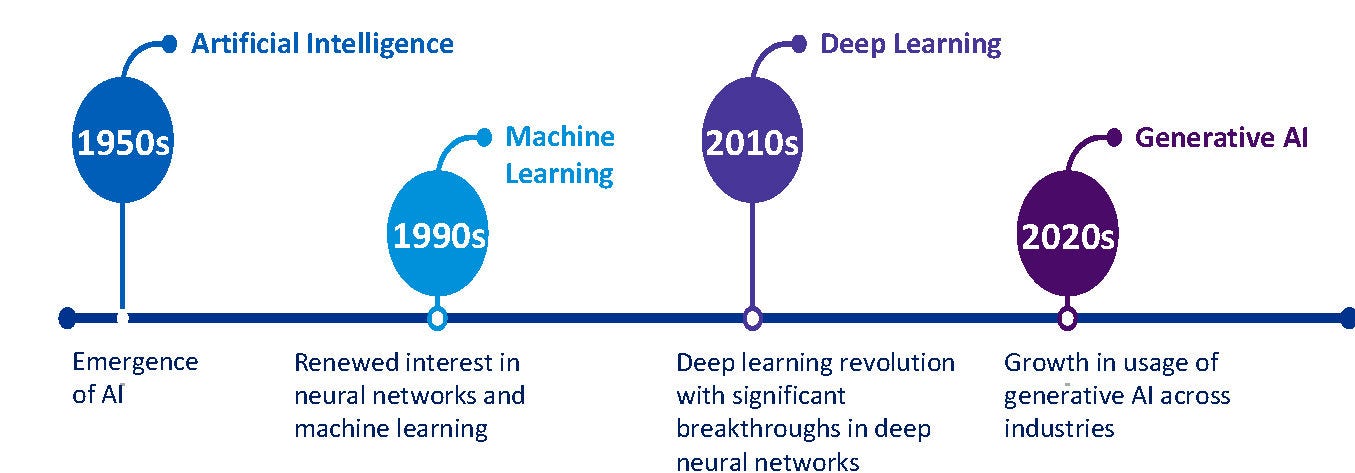

Since its inception in the 1950s, artificial intelligence has continuously evolved. One milestone that signalled the technology's potential came in mid-2022, when an AI-generated image won an art contest. More recently, chatbots based on large language models (LLMs) and text-to-image models have attracted considerable interest and further fuelled discussion of the potential of generative AI (see box, “The Evolution of AI”).

The evolution of AI

Source: Q1 2024 issue of the SID Directors Bulletin published by the Singapore Institute of Directors

Driven by the potential of generative AI, many companies have announced significant investments in developing generative AI models to capitalise on the opportunities it presents. However, its rapid rise has also raised concerns, and some stakeholders have called for more regulation of generative AI and even restrictions on the scope of its use.

Risks and considerations of using AI

Despite the benefits that generative AI can bring, its use has also raised concerns, such as the confidentiality of information fed into AI systems and the accuracy of the information they produce. The use of AI will also need to align with the company’s culture and values.

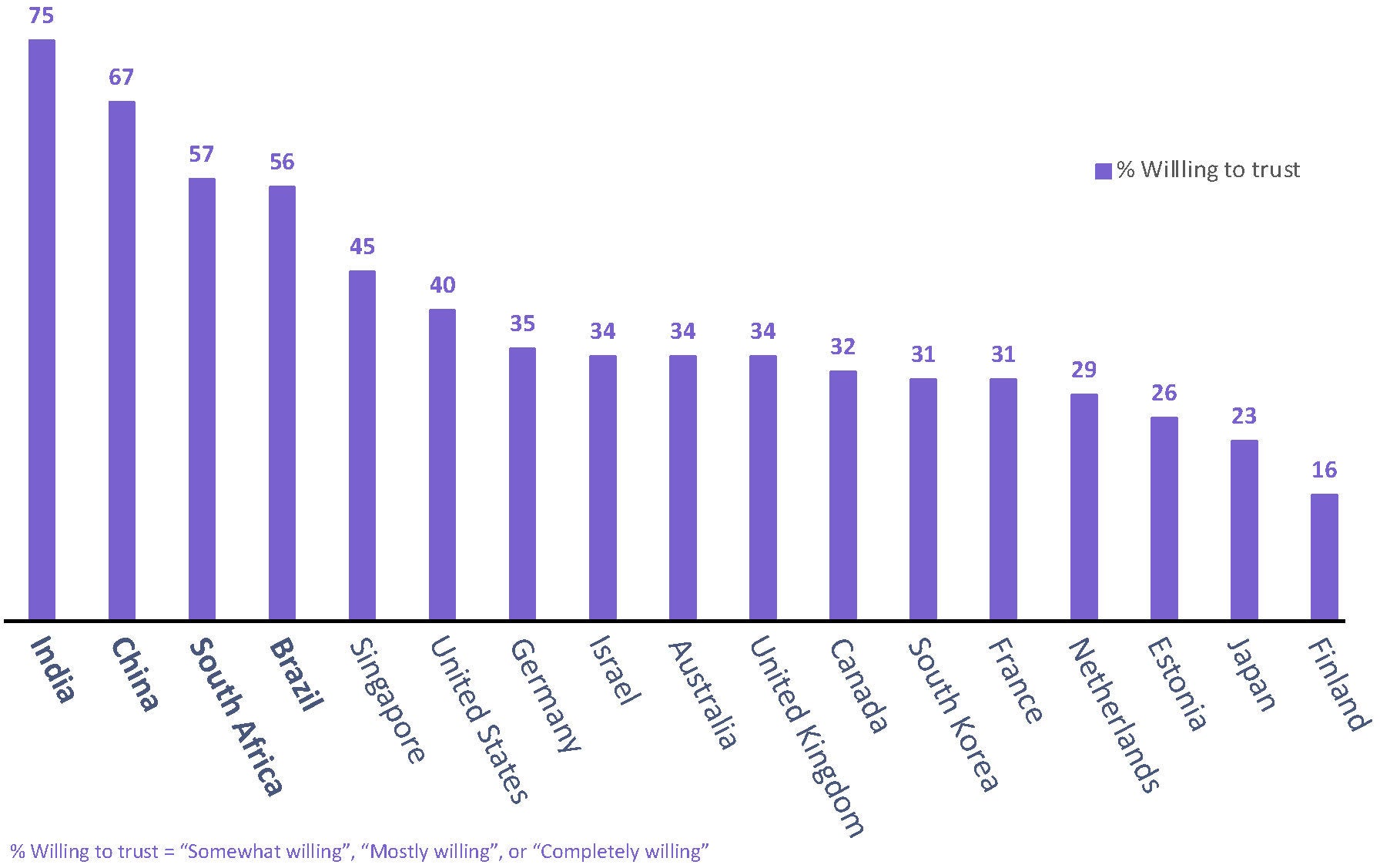

Before a company decides to introduce new technologies such as AI into its systems, it will also need to consider whether these technologies could compromise the digital integrity of those systems and whether adequate cyber defences are in place. The box, “Do People Trust AI?”, shows the level of trust that people in different countries have in AI.

In Singapore, while about 45 per cent of people are willing to trust AI, the remaining 55 per cent are apprehensive or unwilling to do so. In general, AI is most trusted in emerging economies and less so in Western and other countries.

These concerns are not unfounded. Addressing them reduces the likelihood that the use of AI backfires and undoes the gains the technology has brought to the workplace.

Do people trust AI?

Source: Q1 2024 issue of the SID Directors Bulletin published by the Singapore Institute of Directors

As AI use can be pervasive throughout an organisation and have both internal and external implications, policies to mitigate its risks should cover both areas. For example, internal policies can set out how employees should use AI tools for work so that the information taken from them is accurate and appropriate, while external policies can focus on strengthening the firm’s cyber defences.

The box, “Top Risks of AI”, shows some of the risks that can come with the use of AI. Recognising these concerns will help an organisation find ways to integrate AI safely and seamlessly into its business operations.

Top risks of AI

Internal Risks

These include risks associated with internal operations and how employees in the firm deal with the introduction of AI into the workplace.

- Confidentiality and intellectual property. As AI learns and relies on data fed to it by the user, safeguarding sensitive or proprietary information is paramount. Establishing robust protocols to prevent unauthorised access and data breaches is crucial for maintaining business integrity.

- Employee misuse and information inaccuracy. AI tools might inadvertently produce misleading or incorrect content, leading to reputational damage or legal issues. Rigorous content validation, comprehensive training and guidelines on using generative AI responsibly are essential to mitigate this risk.

- Talent implications. High-quality, expert output can only be achieved with professional know-how and technical familiarity. Rather than relying solely on AI to do the job, organisations can invest in upskilling initiatives that help employees embrace the technology and use it as a tool to improve the quality of their work.

External Risks

These include implications that AI might bring to the company’s external networks or image, such as its potential to tarnish reputation or damage client trust.

- Copyright. Organisations must navigate a complex legal landscape to ensure they have the rights to use and distribute AI-generated content. Doing so requires a comprehensive approach that combines legal expertise, technological awareness and a commitment to ethical AI practices.

- Financial, brand and reputational risk. Inaccurate or offensive content can damage a brand’s reputation and result in legal liabilities. By adopting a vigilant content moderation strategy, organisations can not only protect their brand image but also demonstrate a commitment to a responsible and ethical online presence.

- Cyber security. Hackers might exploit vulnerabilities in AI models to gain unauthorised access to data or manipulate generated content. Implementing robust cyber security measures and staying vigilant against emerging threats is crucial to safeguarding sensitive data.

Source: Generative AI models — the risks and potential rewards in business, KPMG International

A holistic risk management strategy

The risks of AI extend beyond technology-related functions and are often interconnected. To address them comprehensively, organisations will need a strategy that cuts across different functions, such as legal, ethics and security.

According to the research, six key dimensions must work together to ensure that AI is used responsibly.

- Organisational alignment. The purpose of using AI should align with the organisation’s strategy and values. To this end, developing and updating the company’s resources, processes, policies and people capabilities is crucial to executing its AI strategy responsibly.

- Data. Data availability, usability, consistency and integrity should be assessed to ensure the data is comprehensive enough to produce accurate and reliable outcomes.

- Algorithms. Technical features and audit trails of algorithms should be clearly documented. Appropriate assessment should be conducted prior to deployment to ensure accuracy and integrity, with ongoing monitoring to take corrective action when necessary.

- Security. Stringent cyber security protocols should be implemented to prevent adversarial machine learning attacks, hacking and other cyber threats that could undermine the AI system’s performance and result in breaches of human and legal rights.

- Legal. Local and global regulations governing data and AI should be understood and consistently followed, and monitored for changes as they evolve.

- Ethics. The purposes of AI systems should be transparently explained to stakeholders, and the outcomes should also be monitored to ensure fairness. Frequently assessing the implications of AI can help stakeholders make informed decisions.

Using such a framework to build a comprehensive strategy that balances the risks and benefits of AI will help an organisation foster confidence in its use of AI and capitalise on the opportunities the technology offers.

How will AI continue to evolve?

As technology becomes more advanced, generative AI will continue to revolutionise industries and transform how we work and live. Potential future use cases include creating realistic 3D assets from text, image or voice inputs at the click of a button, personalising product algorithms using data from a user’s Internet search history, or even fully automating processes such as code generation.

As generative AI grows in popularity, businesses will inevitably need to consider the implications of using it. And with much of AI relying on personal or organisational data, is the trade-off worth the cost to privacy?

As AI continues to evolve, users will also need to recognise that these systems have flaws and can introduce pitfalls such as unreliability, bias and inaccuracy. If not handled well, these issues can significantly affect decision-making and societal well-being.

Ultimately, organisations will need to carefully consider how the risks of AI can be prevented or mitigated in order to strike a balance between reaping the benefits of AI and safeguarding privacy. Ensuring that generative AI is used and managed responsibly requires the active involvement of government bodies, the technology sector and society.

Only by acknowledging the challenges that AI can bring and working together to address them can its potential be fully harnessed, paving the way for AI to reinvent our digital future.