In an era in which artificial intelligence (AI) is rapidly transforming our world, addressing AI-related risks to society has become a critical priority. This article examines the systemic risks posed by AI, provides a framework for analyzing them, shows what is already being done, and highlights the urgent need for effective interventions. By examining the roles of governments, organizations and citizens, we explore how collective action can mitigate these risks and ensure the responsible use of AI.

Understanding the systemic risks of AI

In recent years, AI has emerged as a new system technology that not only affects us in our individual lives but also has the power to transform society, for better or worse. The systemic risks of AI have become a topic of interest both in the public debate and among policymakers. However, because AI is a versatile technology that affects many aspects of society, the debate on AI risks often focuses on individual incidents or skims the surface with generic arguments for or against AI. This stands in the way of the deeper understanding of AI risks that is needed to find proper solutions.

To better understand the systemic risks of AI, it is first important to recognize that in many ways AI does not pose completely new challenges to society. Instead, it strengthens existing structural trends, such as the spread of disinformation. This realization shows that AI risks are more than a technical problem: they are connected to broader social and economic challenges, which we will call AI-reinforced threats.

The AI-reinforced threats to society operate on three levels. First, and most fundamentally, the use of AI can undermine our shared view of reality. The low-cost generation of realistic-looking synthetic text, audio and video may severely impair our ability to separate fact from fiction. Furthermore, digital platforms, which increasingly mediate our interaction with the rest of the world, pose a major manipulation risk because they determine what we get to see.

On a second level, the use of AI has the potential to shift the balance of power between and within societies. AI enables mass surveillance practices that can be put to use by states and corporations. In addition, the military use of AI may lead to far-reaching autonomy in both digital and physical weapons.

Finally, on a third level, the use of AI affects the human rights and well-being of large groups within society. This category comprises a number of different risks. Automated decision-making can lead to "computer says no" situations without proper means of appeal and redress. The statistical foundations of AI may result in the marginalization of people who deviate from the norm. Our dependence on technology deepens further as AI takes over ever more mental tasks. Generative AI poses risks to intellectual property. And finally, AI enables the hyper-personalization of digital content, which allows human psychological weaknesses to be exploited in ways tailored to the individual.

Where do we currently stand in addressing AI-reinforced threats?

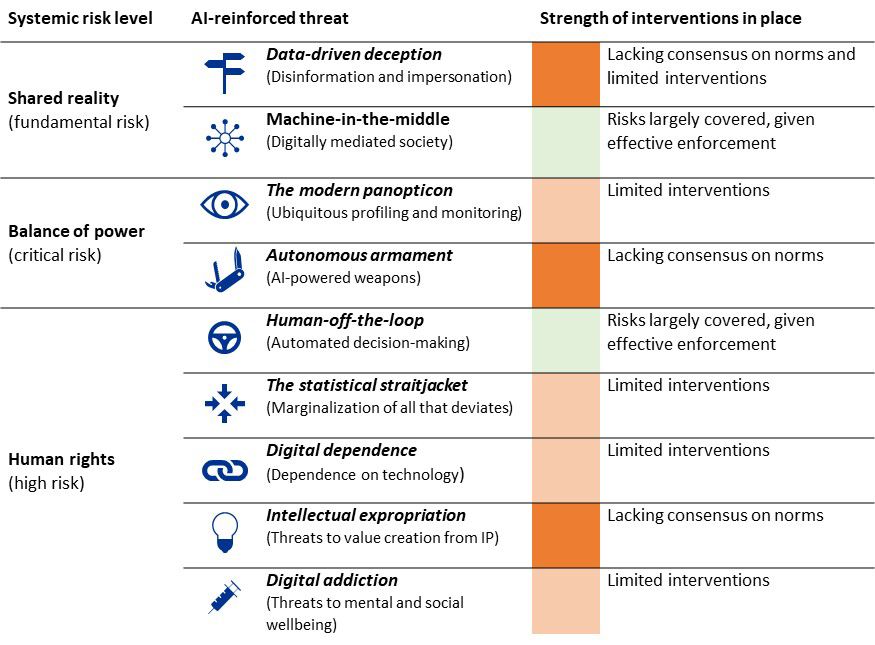

We are not powerless against the systemic risks of AI. In the EU, a serious attempt is being made to mitigate AI risk through legislation, resulting in landmark regulations such as the AI Act, the Digital Services Act and the Digital Markets Act. These laws mainly aim to manage the systemic risks of AI by imposing mandatory requirements on the development process and by banning or limiting certain use cases. However, they do not address every AI-reinforced threat to the same extent. The same goes for other types of societal interventions, such as education or voluntary codes of practice. Figure 1 provides a high-level assessment of the effectiveness of current interventions for each of the AI-reinforced threats.

Figure 1 - High-level assessment of the current interventions in place per AI-reinforced threat

Based on our observations, we conclude that for most of the systemic risks of AI, concerns remain about the scope and effectiveness of the interventions currently in place. For a number of threats, we even lack the consensus and norms needed to take proper action. This also applies to the most critical threats: those that affect our shared view of reality and the balance of power. Addressing these threats proactively is essential to safeguard our liberal democratic institutions and to ensure our long-term capability as a society to cope with developments in AI.

What to do next? Action points for governments, organizations and citizens

Our analysis has provided a framework for thinking about the systemic risks of AI and a view of the priorities on the road ahead. The pivotal remaining question is: who should act? As primary stakeholders, governments, organizations and citizens all have an important role to play.

Governments

Governments occupy an ambivalent position regarding the systemic risks of AI. They not only hold the legal authority to regulate the use of AI, but are also among the most powerful entities capable of causing significant harm with it. Of course, a government is not a single entity but a collection of institutions. For the purposes of this topic, we distinguish between the parts of government involved in legislation and regulation and the executive branch. In the area of legislation and regulation, we suggest the following action points:

- Continually reassess the bans or moratoria already in place (e.g. via the AI Act) in light of new AI developments. Where a ban is not deemed feasible, consider developing specific standards and requirements for critical domains that are exempt from current legislation, such as national security.

- Apply the precautionary principle to all developments related to AI-powered disinformation, impersonation and autonomous weapons. Ensure that democratic institutions and processes are as resilient as possible against destructive forces.

- At the same time, specifically for autonomous weapons, ensure that developments abroad are closely monitored and acted upon. Treat AI capabilities as a key asset for strategic autonomy.

- Update or clarify any legislation that the advent of AI has affected or rendered outdated.

- Curtail the power of market players when they become too dominant in one of the key (social) infrastructures and prove not to be amenable to regulation.

- Require organizations to perform a thorough risk assessment throughout the AI lifecycle, and provide clear guidance on what such assessments are expected to cover.

- Set and enforce standards for critical uses of AI. This can take the form of mandatory design principles or technical requirements.

- Set up or support AI literacy programs to adequately inform citizens about AI.

- Set up or strengthen regulatory bodies to monitor compliance with AI-related regulation, and ensure that fines and penalties are high enough to have a deterrent effect.

The executive branch is where government uses AI in services for citizens. We suggest the following action points:

- Ensure that the effectiveness of an AI application is proven before deployment or, at a minimum, that the application is subject to a sunset clause.

- Be prepared to provide transparency about the use of AI.

Organizations

Organizations are fundamentally incentivized to act in the interest of their most important stakeholders, such as shareholders or supervisory bodies. However, this does not mean that their role is passive or that they can only be expected to act under pressure from laws and regulations. Proactively addressing the systemic risks of AI can make sense from a strategic perspective. Acting in the interest of society helps to preempt stringent and costly regulation. Furthermore, it can help organizations remain attractive to employees and customers. We suggest the following action points for both commercial and noncommercial organizations:

- Invest in co-developing industry standards and practices that both improve the overall quality of AI applications and preempt further legislative action.

- Gain insight into the portfolio of AI applications in development and in operation, as a basis for ascertaining compliance with AI-related regulation.

- Implement a lean but effective risk assessment process as part of AI application development, to ensure alignment with organizational goals and to prevent ethical or legal blowback later on. This process should cover technical, legal and ethical risks and dilemmas. With regard to systemic risk, the trends and threats described in this article can be included in the assessment.

- Establish fit-for-purpose AI governance practices, aligned to the risk profile of the organization’s AI portfolio. This includes topics such as data management, AI literacy, and monitoring of application performance.

Citizens

Citizens (and consumers) are often on the receiving end of AI mishaps, and the harsh truth is that individually they may not be able to stand up to the state or a large organization. However, this does not justify a passive approach. In the end, citizens collectively define public values and norms and (indirectly) guide the direction of government. It is a matter of getting involved and organized. Our suggested actions:

- Get informed, and help inform others, about AI, its applications, its risks and the mitigations available at the individual level.

- Get organized – be it via NGOs, unions, political parties or otherwise – to influence the public debate, government and organizations.

- Take active part in the social, economic and ethical discussions that are needed to shape the values and norms that will determine what our AI-infused society will look like, now and in the future.

Conclusion

The systemic risks of AI form a multifaceted challenge that can best be understood in terms of the broader societal threats aggravated by the introduction of AI. These threats relate to basic human rights, the balance of power within society and even our shared concept of reality. However, we are not powerless against these threats. In the European context, action is already being taken through legislation aimed at AI and at digital services and markets. Not all threats have been mitigated yet, and we can reasonably expect many more AI innovations to introduce new societal challenges. Being able to respond to such challenges effectively and in line with human rights is of paramount importance. We should therefore be especially vigilant regarding the AI-reinforced threats that directly undermine our liberal democratic institutions. Shaping the future development of AI is a collective responsibility, and everyone has a role to play in this important endeavor.

This article is an extract from the Compact Magazine article: Shaping the synthetic society: What's your role in safeguarding society against the systemic risks of AI? (Issued: October 2024).