With the focus shifting to productivity, now is the perfect opportunity to take advantage of technological advances and the compelling use cases for AI in non-clinical areas. Although non-clinical AI has a lower risk profile than clinical AI, there are critical success factors to ensure such solutions are deployed effectively and safely.

Person-centric healthcare

Imagine if, during a consultation, a doctor could spend their entire time interacting directly with the patient. There would be no time spent typing into an Electronic Patient Record or committing every detail to memory. How much time would be saved, and how much more focus could be given to the patient?

What if appointments were designed and scheduled to maximise clinical care and patient convenience, with timely and personalised communication? How much would attendance and patient engagement increase?

And what if intelligent virtual assistants could answer finance and HR questions, helping staff to complete mundane and routine tasks? How much time could finance and HR teams release to focus on more complex, value-adding work? What if recruitment processes were more streamlined, with HR teams automatically gaining additional insights to help them select the best candidates and determine the best training to meet future needs? How much would job satisfaction and retention increase?

Clinical AI is complex

While attention has focused on the more ‘interesting’ clinical AI, the questions above highlight the significant opportunities for AI to improve the non-clinical aspects of healthcare.

Clinical AI is complicated. There are few examples of successful implementation at scale, and the success stories that do exist are limited to a small number of areas. For example, in radiology, Brainomix supports the interpretation of brain images and helps clinicians select the correct treatment for stroke patients.

Scaling clinical AI solutions is difficult. They must navigate regulatory and compliance requirements as well as ethical considerations. The level of accuracy and explainability needed is extremely high, and the stakes of getting it wrong are significant, with patient harm a real possibility.

Scrutiny of clinical AI is very high, and the risk profile leads to longer lead times, complex evaluation requirements and higher investments to get started.

There is a growing emphasis on improving productivity

The NHS is currently facing several operational challenges and is expected to deliver a 2% productivity improvement[i]. The 2024 Spring Budget announced £3.4bn to help improve productivity in the NHS, with £1bn towards transforming the use of data, including pilots of how AI can improve non-clinical functions[ii].

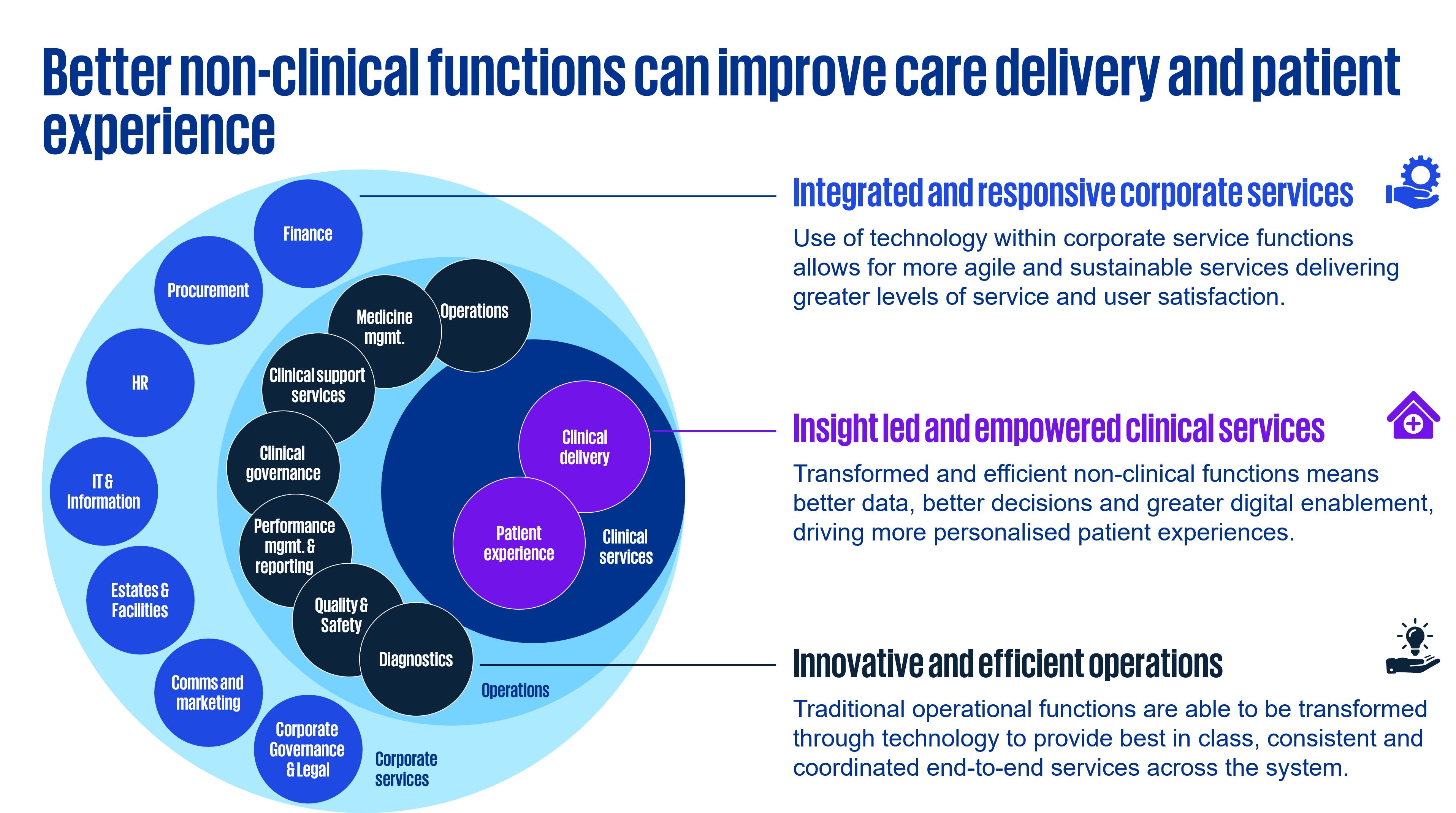

There are three key functional capability areas in the NHS:

Better non-clinical functions (corporate services and operations) will underpin productivity gains, improve care delivery and patient experience, and drive overall efficiency.

Where can AI help across healthcare non-clinical areas?

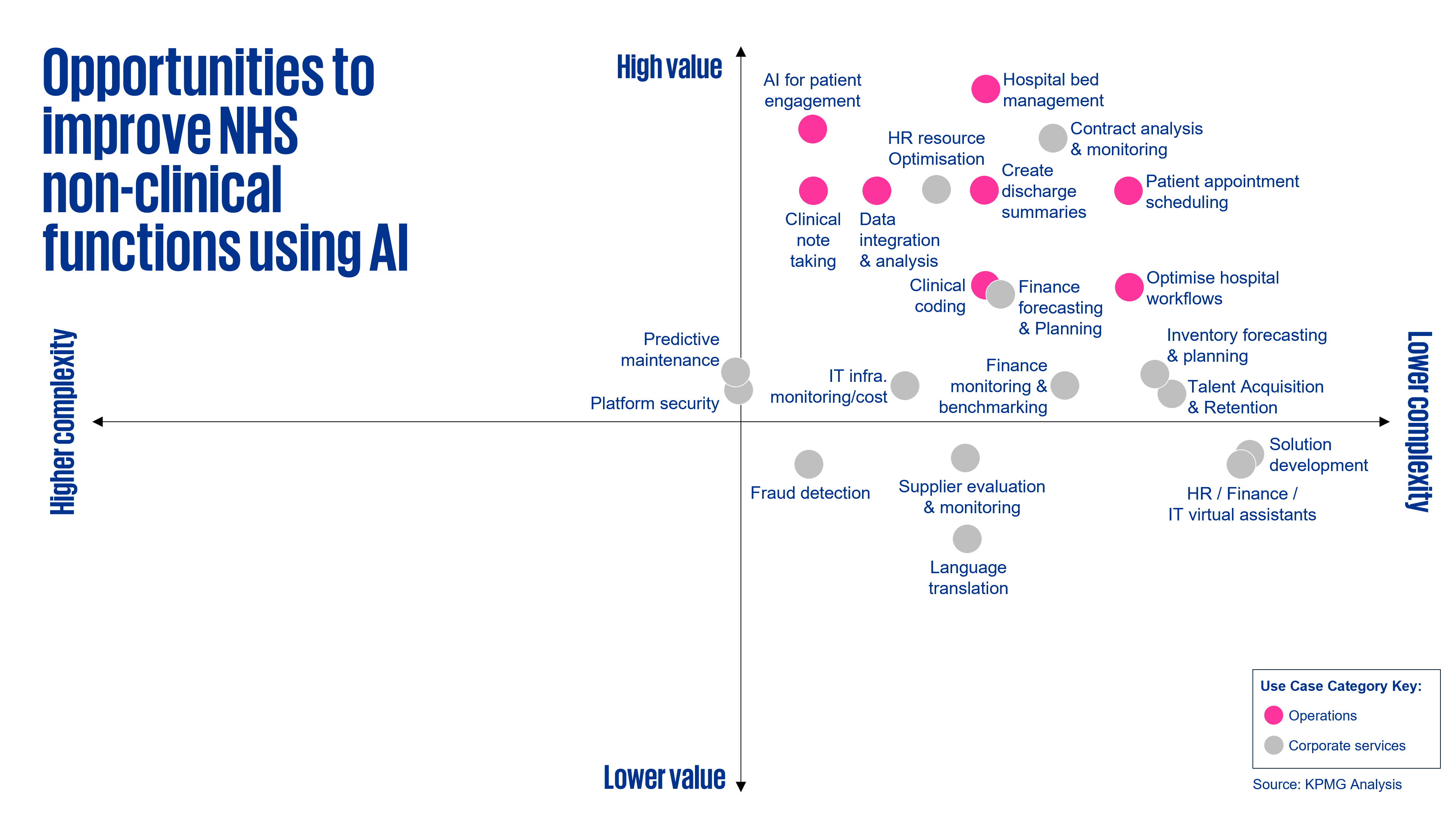

While the specific outputs will differ for each organisation, the priority use cases to explore, based on highest value and lowest complexity, include:

For example, AI can capture the dialogue between the doctor and the patient and translate it meaningfully into a structured patient record.

AI can optimise demand and capacity when scheduling appointments, taking into account individual patient characteristics (e.g., working parents). It can also tailor the language and phrasing of communications to patients.

Several AI solutions in these areas are being trialled in the NHS. These include:

- An AI platform co-developed by Deep Medical and NHS staff that predicts which appointments may be missed based on several data points, including weather and jobs. By suggesting optimal time slots, it reduced Did Not Attends (DNAs) by 30% in pilots conducted at Mid and South Essex NHS Foundation Trust[iii]

- A WhatsApp-based solution will be piloted in London to help patients and staff schedule cervical cancer screening appointments more effectively[iv]. The Asa AI assistant will support appointment scheduling, personalise the language and wording of messages to patients, and help answer questions patients and families may have

The context from across the wider public sector (and beyond)

Productivity is a key theme across the public sector. In March 2024, the Chancellor announced plans to deliver £1.8bn of benefits through improving public sector productivity[v].

AI is widely seen as an important tool to underpin the public sector productivity agenda. The recently published NAO report on ‘Use of artificial intelligence in government’ provides interesting insights[vi]. It highlights a 2023 analysis by the Central Digital and Data Office (CDDO), which indicated that a third of routine tasks in the civil service could be automated.

For a variety of reasons, including lower risk tolerance, underlying data infrastructure challenges and a scarcity of the required skills, the public sector has not been able to realise the full potential of AI. According to a survey from autumn 2023, only 37% of government organisations had deployed AI, and usually with only one or two use cases each.

Given the parallels with healthcare and the challenges facing clinical AI solutions, it is notable that the NAO report also found organisations were mostly deploying AI solutions that improved internal processes, supported operational decision-making and supported research or monitoring, rather than solutions that engaged directly with the public. For example, HM Land Registry used AI to reduce the number of manual tasks a caseworker had to complete.

This focus on internal rather than public-facing use cases persists despite citizens becoming increasingly digital. A recent KPMG survey (CEE) found that citizens of all ages are now open to using digital channels[vii].

Examples from the private sector are showing the impact AI can have on productivity. For example, a recent Proof of Concept (PoC) that KPMG completed with a retailer showed a 20% decrease in colleague workload and a 25% reduction in costs by using GenAI-driven technology, compared with non-AI productivity plans.

What does the NHS need to do to successfully adopt the above use cases at scale?

Key success factors

In our experience, there are three key elements that have enabled organisations to identify, develop and deploy AI to maximise benefits and drive productivity:

1. Integration into the workflow

Even in non-clinical areas, it is important to consider existing workflows and processes. AI solutions need to integrate with other systems and ways of working (including data collection and re-entry into other ‘main’ systems); otherwise they create more work and take more time overall.

2. Identifying and tracking benefits

Given the importance of improved efficiency and productivity, it is essential to baseline performance and to have mechanisms in place to monitor the benefits delivered. It is sensible to pilot solutions, evaluate them and then, depending on the outcome, decide whether and how to scale.

Roadmaps should be self-funding wherever possible, starting with a phase in which quick wins improve services and release cash. This can then fund subsequent phases, which consist of increasingly complex and ambitious opportunities. This iterative approach will gain buy-in and maintain momentum throughout the transformation.

3. Taking a proportionate approach to evaluation and risk

Evaluating non-clinical AI solutions calls for a different approach from clinical AI solutions. For example, they are unlikely to need Randomised Controlled Trials. Pragmatic evaluation methodologies are required that balance rigour with execution timelines, in keeping with the fast-changing AI technology landscape.

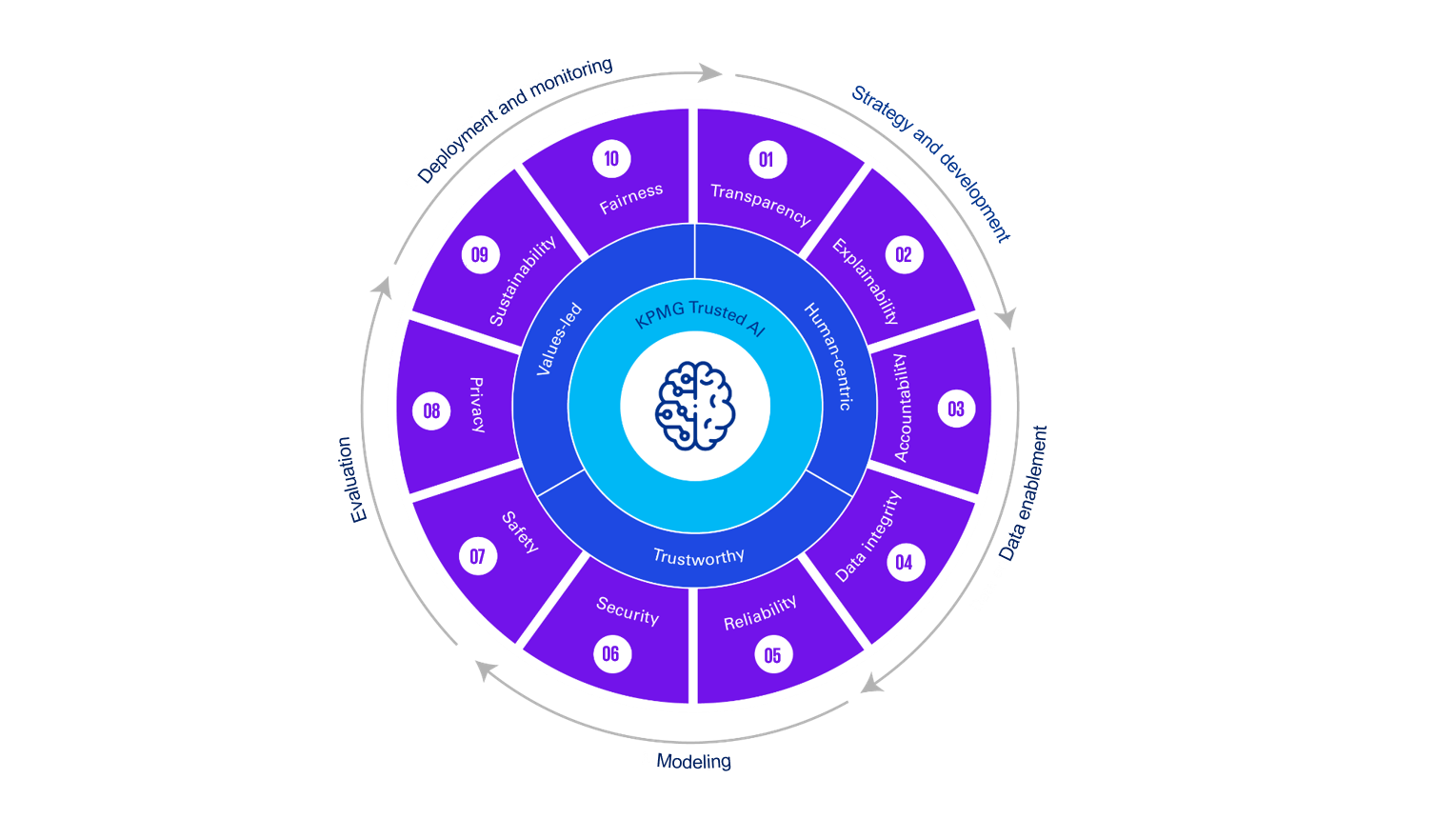

Managing risk remains a critical requirement for non-clinical AI solutions. The KPMG Trusted AI approach is our methodology for designing, building, deploying and using AI strategies and solutions in a responsible and ethical manner. It consists of 10 key elements[viii]:

How KPMG can help

We help clients in a variety of ways including:

- Co-producing AI strategies and helping identify use cases

- Helping them identify and work through the legislative, governance and risk implications of using AI, and supporting implementation of appropriate risk management systems and guardrails

- Helping bring use cases to life through developing PoCs

- Helping organisations that have developed their own AI solutions with assurance and validation, and with scaling those PoCs across the organisation

Please get in touch with Janak Gunatilleke if you would like to discuss this further.

Contributors

Jonathan Orritt | Torsten Fritz | David Manchester



[i] The 2024 Budget and NHS productivity - GOV.UK (www.gov.uk)

[ii] Spring Budget 2024 - GOV.UK (www.gov.uk)

[iii] NHS AI expansion to help tackle missed appointments and improve waiting times - NHS England

[iv] AI-powered WhatsApp messaging cleared for NHS pilot - pharmaphorum

[v] £1.8 billion benefits through public sector productivity drive - GOV.UK (www.gov.uk)

[vi] Use of artificial intelligence in government - govx.digital