Supervisory Feedback

At the end of last year, financial institutions were put on notice that there is still significant work to do on scenario testing. In particular, the Regulator pointed to:

- Lack of definition around severe but plausible scenarios

- Lack of testing with 3rd and 4th parties

- A focus on asset recovery rather than impact mitigation

- Lack of data to support assessment of whether impact tolerances are breached

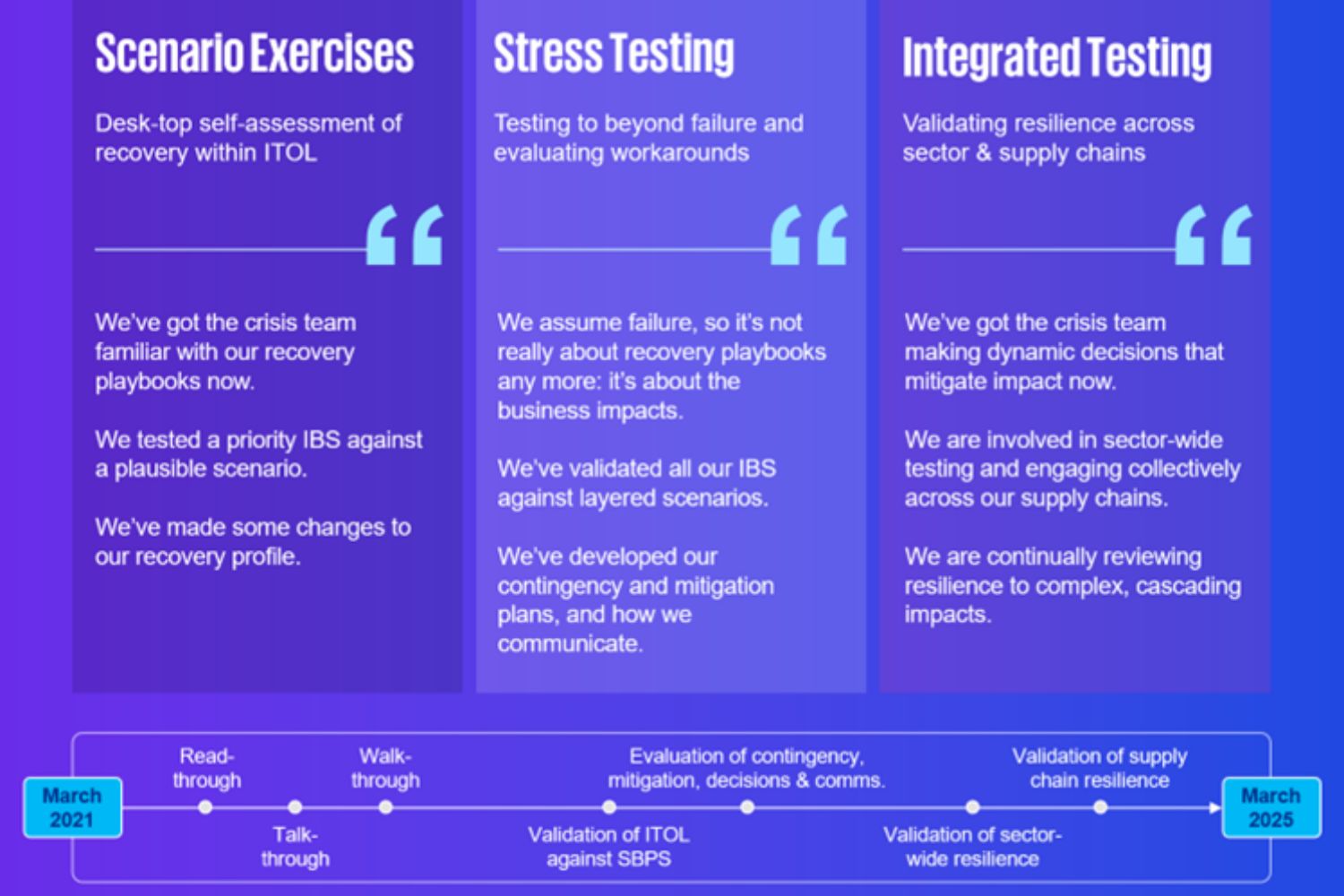

Having worked with many firms on their journey towards compliance, we see a maturity scale that ranges from desktop asset-recovery testing to integrated resilience testing across the sector and supply chains.

Over recent weeks, we’ve held roundtable events for clients across the UK, where firms shared their experience and views on the real challenges of implementing a scenario testing regime. Many firms say they get stuck in a comfort zone of desktop exercises focused on asset playbooks and recovery. A few say they have moved up the scale to focus on contingency plans, mitigation plans, decision-making and communication. Several also recognise elements of what we are calling stress testing and integrated testing, but acknowledge the need to do more in those areas.

Success Factors

Here are some of the success factors we heard clients talking about:

- Moving from playbooks to decision support: recognising that predetermined responses cannot cover the complexity of a crisis, hence the need to consider which processes and information are required for effective decision-making

- Moving from asset recovery to consequence management: recognising that the operational response to, and recovery from, an impact needs to be complemented by a wider, more forward-looking view of the 1st-, 2nd- and 3rd-level consequences for the business, the sector and customers

- Moving from assumptions to validation: recognising the need to provide decision-makers with data and to demonstrate data-driven decision-making in test reports. This includes finding innovative ways to gather data at scale (possibly using tooling) and using challenge from red, blue or purple teams

Talking Points

Here are some of the questions clients raised as they seek to course-correct following the Regulator’s 2023 supervisory feedback:

- What does a good scenario library look like? How complex or simple do the scenarios need to be, and how do we define plausibility?

- If we are asked to evidence important business service (IBS) playbooks, what does a good playbook look like? What should it contain, and how does it fit with an enterprise-wide response plan?

- If we are asked to shift the emphasis of scenario testing from asset recovery to business impacts, what do good tests look like and how do we deliver them efficiently?

- How do we realistically involve 3rd and 4th parties in testing, and how can we make those tests efficient? What can we expect on testing from the Critical Third Parties policy this year?

We’ll be offering some insights on these issues over the next few weeks.