The EU AI Act marks a pivotal shift in the regulatory landscape, aiming to ensure that AI systems deployed within the EU are safe, transparent, and aligned with fundamental rights. It is a risk-based regulatory framework that classifies AI systems into four categories: unacceptable risk, high risk, limited risk, and minimal risk. Compliance is not merely a legal obligation but a strategic imperative that affects trust, brand reputation, and long-term competitiveness.

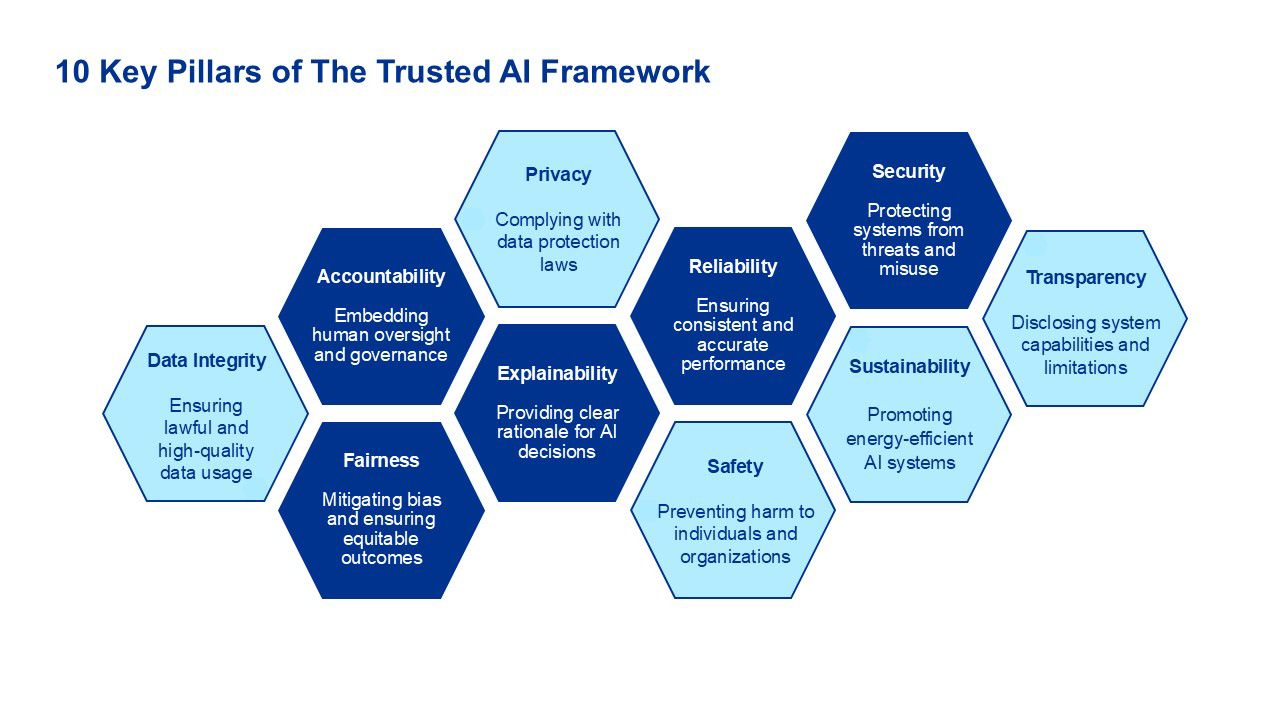

Key implications include mandatory conformity assessments for high-risk AI systems, transparency obligations for AI systems interacting with humans, robust data governance and documentation requirements, and human oversight together with accountability mechanisms. KPMG’s Trusted AI Framework is designed to align with these requirements, offering a structured approach to governance, risk management, and ethical deployment.

Author

Alexander Zagnetko

Manager, Process Organization and Improvement

These principles are operationalized through KPMG’s Trusted AI Council, which oversees policy development, risk assessments, and regulatory alignment.

Evaluating AI Systems Under the EU AI Act

When assessing risks, it’s important to evaluate how a tool or technology is used, considering its data sources, decision-making processes, and potential societal impact. Key steps include:

- AI Maturity Assessment: Evaluate readiness across governance, data, technology, and culture.

- Use Case Prioritization: Identify high-risk applications and assess their regulatory exposure.

- Gap Analysis: Compare current practices against EU AI Act requirements.

- Control Effectiveness Review: Assess safeguards, monitoring mechanisms, and escalation protocols.

When selecting the right AI solutions, organizations should strive to balance business value, technical feasibility, and regulatory compliance. It is therefore important to consider several questions: Does the solution support the organization’s goals? Are the data sources legal, ethical, and of high quality? Is the technical environment secure and scalable? Are external vendors compliant with AI governance standards?

When using artificial intelligence, several additional aspects need to be considered. If AI significantly affects people’s access to services, employment, credit, education, or healthcare, it represents a high risk and requires stricter controls. Similarly, if it can generate content, mimic humans, or create deepfakes, transparency measures must be implemented, including clear labelling, verifiability, and content moderation. For autonomous AI agents interacting with systems or data, safeguards should be established: limiting access, using isolated environments, relying only on approved tools, and ensuring continuous monitoring.
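The three conditions in the paragraph above can be read as a simple triage rule. The following is a minimal sketch of that reading, not a legal classification under the Act: the `UseCase` fields, the `triage` function, and the control labels are hypothetical names introduced here for illustration, and the list of sensitive domains is taken only from the paragraph above.

```python
from dataclasses import dataclass

# Domains the paragraph above names as significantly affecting people.
# Illustrative only; this is not the Act's list of high-risk areas.
SENSITIVE_DOMAINS = {"services", "employment", "credit", "education", "healthcare"}


@dataclass
class UseCase:
    """Hypothetical description of one AI use case under review."""
    name: str
    affected_domains: set[str]
    generates_content: bool = False   # can generate content, mimic humans, or create deepfakes
    acts_autonomously: bool = False   # autonomous agent interacting with systems or data


def triage(use_case: UseCase) -> list[str]:
    """Return the control themes the text associates with this use case."""
    controls = []
    if use_case.affected_domains & SENSITIVE_DOMAINS:
        controls.append("high risk: stricter controls")
    if use_case.generates_content:
        controls.append("transparency: labelling, verifiability, content moderation")
    if use_case.acts_autonomously:
        controls.append(
            "agent safeguards: limited access, isolated environment, "
            "approved tools, continuous monitoring"
        )
    return controls or ["no additional controls flagged by this sketch"]


if __name__ == "__main__":
    screening = UseCase("cv-screening", {"employment"})
    for control in triage(screening):
        print(f"{screening.name}: {control}")
```

A rule like this is useful only as a first filter for an inventory of use cases; anything it flags still needs a proper legal and risk assessment.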

Regulatory compliance cannot be treated as an afterthought; it must be integrated into the entire AI development lifecycle. That ranges from automated comparison of internal policies with regulatory requirements, through thorough documentation and traceability of data and decisions, to ongoing monitoring with real-time alerts and regular training on risks and regulatory expectations.

Finally, artificial intelligence introduces new cybersecurity risks. Organizations are therefore advised to protect the access points and interfaces through which AI communicates with customers, maintain control over the data and the environment in which the system operates, and carefully assess the reliability of their vendors and partners.

Frequently Asked Questions

Q: We’re integrating a third-party LLM via API. Do GPAI obligations hit us, or just the model provider?

A: Article 53 targets model providers (documentation, copyright policy, training‑data summary). But integrators (you) still shoulder system‑level duties, especially where the AI system is high‑risk (risk management, oversight, logs, CE‑marking if applicable). Require providers’ docs and build your own controls.

Q: How do copyright and training data affect us if we’re only deploying?

A: You must ensure your upstream model provider has a copyright policy and respects text and data mining (TDM) opt-outs; your contracts should make this auditable. If you fine-tune or retrain the model, you may inherit those obligations yourself.

Q: What makes a model “systemic risk,” and why do we care?

A: A compute threshold creates a presumption of systemic risk (training compute above 10²⁵ floating-point operations), and the Commission can also designate models based on market impact. Systemic-risk models face stronger obligations (evals, risk mitigation, incident reporting, cybersecurity). If you rely on such models, vendor selection and monitoring must reflect this.
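As a rough illustration of the compute presumption, the sketch below estimates training compute and compares it with the 10²⁵ figure. It assumes the common rule of thumb that training a dense model costs about 6 × parameters × training tokens floating-point operations; that heuristic is not part of the Act, the function names are hypothetical, and the model sizes are invented for the example. A provider's own compute accounting is what actually counts.

```python
# Presumption threshold mentioned above: 10^25 floating-point operations.
SYSTEMIC_RISK_THRESHOLD_FLOP = 1e25


def estimate_training_flop(parameters: float, training_tokens: float) -> float:
    """Approximate training compute with the 6 * N * D rule of thumb (an assumption)."""
    return 6.0 * parameters * training_tokens


def presumed_systemic_risk(training_flop: float) -> bool:
    """True when the estimate exceeds the presumption threshold."""
    return training_flop > SYSTEMIC_RISK_THRESHOLD_FLOP


if __name__ == "__main__":
    # Invented model sizes, for illustration only.
    for params, tokens in [(70e9, 15e12), (400e9, 15e12)]:
        flop = estimate_training_flop(params, tokens)
        print(f"{params:.0e} params, {tokens:.0e} tokens: "
              f"{flop:.2e} FLOP, presumed systemic risk: {presumed_systemic_risk(flop)}")
```

An estimate like this can help when screening vendors, but it does not replace the provider's documentation or a Commission designation.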

Q: Will harmonised standards be ready before our 2026 high-risk deadlines?

A: The Commission and JRC expect delays; you should plan on using the GPAI Code, ISO/IEC 42001, and NIST AI RMF as interim scaffolding while mapping controls to emerging CEN‑CENELEC outputs.

Q: How does this all relate to GDPR and the Data Act?

A: The EDPB is clarifying GDPR expectations for AI (lawfulness, fairness, transparency, accuracy), and the Data Act (applying from 12 September 2025) reshapes data access, portability, and cloud switching, which is especially relevant for model training and enterprise AI stacks. Align your AI and privacy governance to avoid duplicate audits.

The EU’s AI rulebook is no longer a concept; it is becoming a compliance regime with teeth, plus a growing toolbox. The companies that win here won’t just “check the box”; they’ll operationalise trust: predictable launches, better regulator relationships, and faster enterprise sales. For European-scale growth, or for global brands selling into Europe, that’s the moat worth building.

KPMG’s approach to EU AI Act compliance is grounded in trust, transparency, and transformation. By leveraging its Trusted AI Framework and sector-specific expertise, KPMG empowers organizations to not only meet regulatory requirements but also unlock the full potential of AI responsibly.
