Although Artificial General Intelligence (AGI) remains beyond the horizon, existing AI models and solutions - such as Large Language Models (LLMs) and Generative AI - already raise profound questions. Unlike purely rule-based systems, these models can learn from data and exhibit emergent behaviours in their outputs. This complexity makes the AI market uniquely sensitive to regulation compared to previous IT breakthroughs. Defining clear limitations and effective controls thus remains a significant challenge.

The EU AI Act is a step toward responsible AI governance, but its success hinges on implementation agility, regulatory clarity, and support for innovation ecosystems. Bureaucracy is a real risk - but so is under-regulation. The challenge is not choosing between ethics and innovation but designing a dynamic framework that evolves with technology while safeguarding societal values.

Author

Alexander Zagnetko

Manager, Process Organization and Improvement

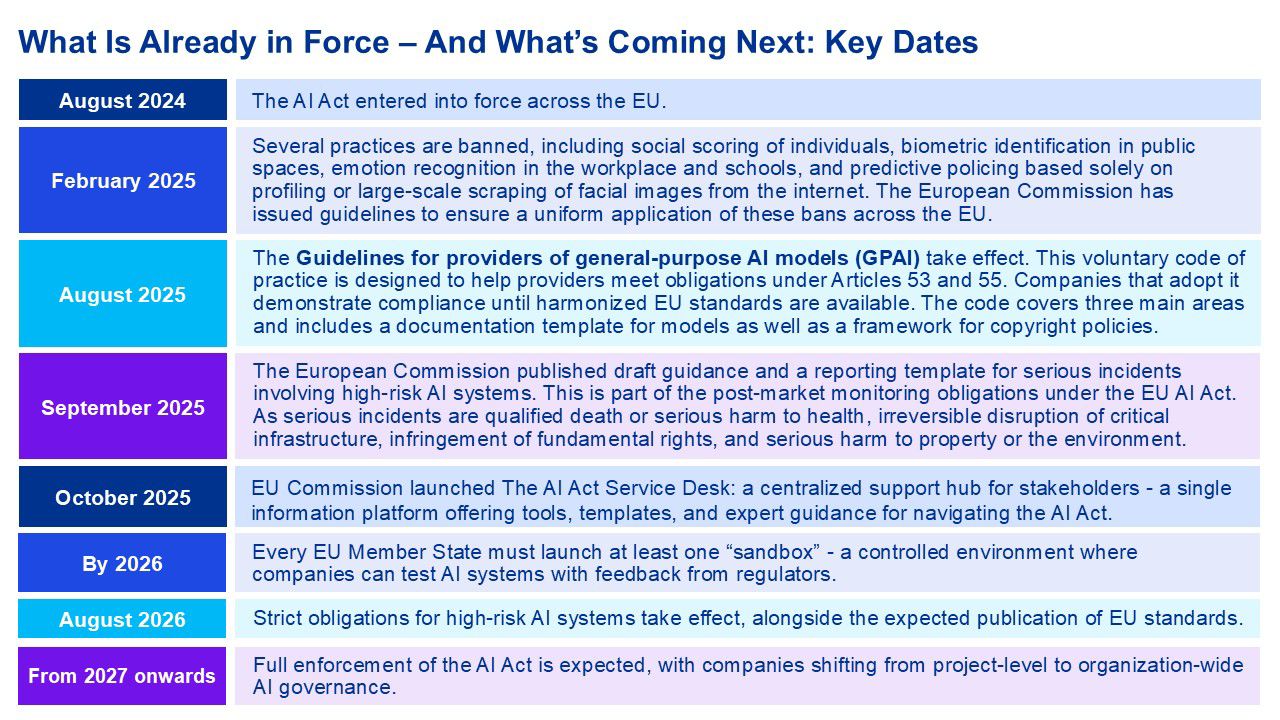

What Compliance Really Looks Like Now

The EU Artificial Intelligence Act (AI Act) entered into force on August 1, 2024, inaugurating the world’s first comprehensive, risk‑based framework for AI. Since then, the regulatory gears have turned quickly: the first prohibitions kicked in on February 2, 2025; obligations for general‑purpose AI (GPAI) models apply from August 2, 2025; most high‑risk system requirements take effect on August 2, 2026; and the rules for high-risk AI embedded in products already covered by EU product-safety legislation follow on August 2, 2027. A new European AI Office now coordinates oversight - particularly for GPAI - while the Commission and standards bodies accelerate guidance, codes of practice, and harmonised technical standards.
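
For planning purposes, the staged timeline can be encoded as a simple date lookup. This is a minimal sketch: `MILESTONES` and `milestones_in_effect` are hypothetical names, the labels paraphrase the Act's application dates, and any later amendment to those dates would have to be reflected by hand.

```python
from datetime import date

# Application milestones of the AI Act as summarised in the text.
# Hypothetical structure; confirm dates against the Official Journal,
# since later amendments could shift them.
MILESTONES = [
    (date(2024, 8, 1), "Entry into force"),
    (date(2025, 2, 2), "Prohibited practices banned"),
    (date(2025, 8, 2), "GPAI model obligations apply"),
    (date(2026, 8, 2), "Most high-risk system requirements apply"),
    (date(2027, 8, 2), "High-risk AI in regulated products covered"),
]


def milestones_in_effect(on: date) -> list[str]:
    """Return every milestone whose start date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


if __name__ == "__main__":
    for label in milestones_in_effect(date(2025, 9, 1)):
        print(label)
```

Run for September 1, 2025, it lists entry into force, the prohibitions, and the GPAI obligations, but not yet the high-risk requirements.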

For executives, the message is clear: treat AI governance as a product in its own right, built once and then continuously maintained and improved. Build a living AI control system - risk triage, model cards and data provenance, human oversight at decision points, secure MLOps, red‑teaming, and post‑market monitoring - then integrate it with your broader privacy, cybersecurity, and safety programs (GDPR, NIS2, product-safety legislation, and the Data Act). That’s how you manage risk and keep shipping features in a rules‑heavy market that is now Europe’s default.

What “Risk Based” Means in Practice

EU AI regulation is built on a risk-based approach: the greater the potential impact of an AI use, the stricter the rules.

Unacceptable-risk practices (e.g. social scoring or manipulative AI) are prohibited outright. The European Commission has issued guidance to ensure these bans are applied consistently across the EU. If your project falls into this category, it must be stopped or fundamentally redesigned.

High-risk AI covers areas such as recruitment, education, credit scoring, healthcare, and critical infrastructure. These systems must meet strict requirements on risk management, data governance, transparency, human oversight, and cybersecurity, and can only be placed on the market after passing a conformity assessment and obtaining CE marking.

Limited-risk AI, such as chatbots or generative AI tools, must clearly inform users that they are interacting with a machine and label AI-generated content.
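
A first-pass risk triage can be sketched as a lookup from use-case tags to tiers that returns the strictest tier triggered. The tag names and keyword sets below are hypothetical simplifications of the examples above, and the minimal-risk default stands in for the Act's fourth tier covering everything else; a real assessment has to test each use case against the wording of Article 5 and Annex III, not against tags.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "unacceptable risk: stop or redesign"
    HIGH = "high risk: conformity assessment and CE marking"
    LIMITED = "limited risk: transparency obligations"
    MINIMAL = "minimal risk: no specific obligations"


# Hypothetical, simplified tag sets drawn from the examples in the text.
PROHIBITED_USES = {"social_scoring", "manipulative_ai"}
HIGH_RISK_USES = {"recruitment", "education", "credit_scoring",
                  "healthcare", "critical_infrastructure"}
LIMITED_RISK_USES = {"chatbot", "generative_content"}


def triage(use_case_tags: set[str]) -> RiskTier:
    """Return the strictest tier triggered by any tag of a use case."""
    if use_case_tags & PROHIBITED_USES:
        return RiskTier.PROHIBITED
    if use_case_tags & HIGH_RISK_USES:
        return RiskTier.HIGH
    if use_case_tags & LIMITED_RISK_USES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    print(triage({"chatbot", "recruitment"}).name)  # HIGH
```

Returning only the strictest tier is a deliberate simplification: a recruitment chatbot is high-risk, yet it still carries the limited-risk duty to tell users they are talking to a machine.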

Violations of the AI Act can lead to significant fines: up to €35 million or 7% of global annual turnover, whichever is higher, for the most serious breaches such as prohibited practices, and up to €15 million or 3% for most other infringements.
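
The “whichever is higher” rule means the ceiling scales with company size. The sketch below assumes the three ceilings in Article 99 (€35 million or 7% for prohibited practices, €15 million or 3% for most other obligations, €7.5 million or 1% for supplying incorrect information) and the rule that SMEs and start-ups face whichever limit is lower. The category keys are hypothetical labels, and the result is only the legal maximum, not a predicted fine.

```python
# (fixed amount in EUR, percentage of worldwide annual turnover) per category.
# Category keys are hypothetical labels for the tiers in Article 99.
FINE_CEILINGS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(category: str, annual_turnover_eur: int, is_sme: bool = False) -> int:
    """Legal maximum: the higher of the two limits, or the lower for SMEs."""
    fixed_amount, percent = FINE_CEILINGS[category]
    turnover_limit = annual_turnover_eur * percent // 100  # integer euros
    if is_sme:
        return min(fixed_amount, turnover_limit)
    return max(fixed_amount, turnover_limit)


if __name__ == "__main__":
    print(max_fine_eur("prohibited_practice", 2_000_000_000))  # 140000000
    print(max_fine_eur("prohibited_practice", 100_000_000))    # 35000000
```

For a company with €2 billion turnover, 7% (€140 million) exceeds the fixed €35 million amount, so €140 million is the ceiling; at €100 million turnover, 7% is only €7 million, so the €35 million amount applies.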

Intersections That Matter

The use of AI in Europe is closely tied to other EU laws. GDPR requires that personal data processed with AI has a legal basis and is handled fairly, transparently, and accurately. The Data Act (applicable from September 12, 2025) is designed to strengthen data portability, make switching between cloud providers easier, and improve interoperability, which matters especially for digital assistants. For cybersecurity controls, draw on the analyses and recommendations of ENISA, the EU’s cybersecurity agency, which publishes specific guidance on AI-related risks and safeguards. Finally, the AI Act goes hand in hand with investment in high-performance computing (e.g. EuroHPC supercomputers) to support the safe development and deployment of AI.

Thinking About Automating with AI: What to Consider

The AI Act doesn’t tell you which AI model to use, but it fundamentally changes how you should choose one. Technology choices are no longer just about functionality: they must also comply with the rules, ensure safety, and earn trust.

Let’s look at five common areas where AI is used and what to keep in mind:

Marketing copy, chatbots, and customer support responses must be clearly labelled as AI outputs. Use well-established models with solid documentation and proper copyright compliance. Set up filters and control mechanisms to prevent inappropriate outputs, and make sure humans can intervene when necessary.
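
Labelling and human intervention can be built into the reply path itself. In this minimal sketch, the disclosure text, the `ESCALATION_TERMS` list, and the `LabelledReply` structure are all hypothetical; the Act requires that users know they are dealing with AI, not any particular wording or data format.

```python
from dataclasses import dataclass

# Hypothetical disclosure text; the obligation is to disclose, not this wording.
AI_DISCLOSURE = "This response was generated by an AI system."
# Hypothetical terms that route a conversation to a human agent.
ESCALATION_TERMS = {"refund", "complaint", "legal"}


@dataclass
class LabelledReply:
    text: str
    ai_generated: bool  # machine-readable flag for downstream systems
    needs_human: bool   # True when a person should review before sending


def label_reply(model_output: str, user_message: str) -> LabelledReply:
    """Prefix the visible disclosure and flag replies that need human review."""
    message = user_message.lower()
    needs_human = any(term in message for term in ESCALATION_TERMS)
    return LabelledReply(
        text=f"{AI_DISCLOSURE}\n\n{model_output}",
        ai_generated=True,
        needs_human=needs_human,
    )


if __name__ == "__main__":
    reply = label_reply("Your order ships tomorrow.", "I want to file a complaint")
    print(reply.needs_human)  # True
```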

Areas such as hiring, loan approvals, or assessing eligibility for social benefits fall into the high-risk category. These require specialized models with precise documentation. If you use general-purpose AI (GPAI), add mechanisms that explain the decisions made and allow for human oversight. Bias testing and ongoing monitoring should also be part of the process.
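
Bias testing can start with a simple comparison of outcome rates across groups. The sketch below computes each group's selection rate and the ratio of the lowest to the highest. The 0.8 review threshold is borrowed from the US “four-fifths rule” purely as an illustration, since the AI Act prescribes neither a fairness metric nor a cut-off, and the sample data is invented.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes per group, from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def impact_ratio(decisions: list[tuple[str, bool]]) -> float:
    """Lowest group selection rate divided by the highest (1.0 if none approved)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


# Illustrative threshold from the US four-fifths rule, not from the AI Act.
REVIEW_THRESHOLD = 0.8

if __name__ == "__main__":
    sample = ([("A", True)] * 6 + [("A", False)] * 4
              + [("B", True)] * 3 + [("B", False)] * 7)
    ratio = impact_ratio(sample)
    print(f"{ratio:.2f}", "escalate" if ratio < REVIEW_THRESHOLD else "ok")
```

In the sample, group A is approved 60% of the time and group B 30%, giving a ratio of 0.50, well below the illustrative threshold, so the model's behaviour would be escalated for human review.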

In industries like manufacturing, transportation, or healthcare, AI must fit within strict product safety frameworks. Choose providers who can demonstrate compliance with European standards (CE marking) and plan for independent evaluation by certification bodies. Systems should include backup mechanisms and be closely monitored even after deployment.
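
Backup mechanisms and post-deployment monitoring can be combined in one wrapper: low-confidence model decisions fall back to a predefined safe action, and the fallback rate becomes a monitoring signal. The confidence threshold and the safe action below are hypothetical placeholders; in a real product they would come from the system's risk assessment.

```python
from dataclasses import dataclass


@dataclass
class GuardedController:
    """Wraps a model decision with a safe fallback and counts fallbacks."""
    min_confidence: float = 0.9  # hypothetical acceptance threshold
    fallback_action: str = "stop_line_and_alert_operator"  # hypothetical safe state
    fallback_count: int = 0
    decisions: int = 0

    def decide(self, model_action: str, confidence: float) -> str:
        """Use the model's action only when confidence meets the threshold."""
        self.decisions += 1
        if confidence < self.min_confidence:
            self.fallback_count += 1
            return self.fallback_action
        return model_action

    def fallback_rate(self) -> float:
        """Post-deployment signal: a rising rate suggests drift worth investigating."""
        return self.fallback_count / self.decisions if self.decisions else 0.0


if __name__ == "__main__":
    controller = GuardedController()
    print(controller.decide("continue", 0.95))  # continue
    print(controller.decide("continue", 0.50))  # stop_line_and_alert_operator
    print(controller.fallback_rate())           # 0.5
```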

Tools supporting productivity, coding, or research offer huge potential but raise data-related concerns. Use models that keep company data secure. Ensure your provider shares information on training datasets and allows data portability under the new European Data Act. Privacy safeguards and staff training are essential.

Autonomous agents, systems that plan actions and use multiple tools independently, carry the highest risk of these five areas. Development should start in AI regulatory sandboxes: controlled environments set up by national authorities, where testing takes place under official supervision. Such systems should undergo rigorous safety testing and include allow-lists of permitted functions and emergency “kill switches.”
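
An allow-list and kill switch can be enforced at the point where an agent calls its tools. This is a minimal sketch under stated assumptions: `GuardedToolRunner` and the example tool are hypothetical, and a production agent would likely also need authentication, rate limits, and durable logging.

```python
import threading
from typing import Callable


class KillSwitchEngaged(RuntimeError):
    """Raised when an operator has halted the agent."""


class GuardedToolRunner:
    """Runs agent tool calls only if allow-listed and the kill switch is off."""

    def __init__(self, allowed: dict[str, Callable[..., object]]):
        self._allowed = dict(allowed)      # explicit allow-list of functions
        self._halted = threading.Event()   # thread-safe emergency stop flag
        self.audit_log: list[str] = []     # every attempted call, allowed or not

    def kill(self) -> None:
        """Emergency stop: every later call is refused."""
        self._halted.set()

    def call(self, tool: str, *args: object) -> object:
        self.audit_log.append(tool)
        if self._halted.is_set():
            raise KillSwitchEngaged(f"refused {tool!r}: agent halted")
        if tool not in self._allowed:
            raise PermissionError(f"tool {tool!r} is not on the allow-list")
        return self._allowed[tool](*args)


if __name__ == "__main__":
    runner = GuardedToolRunner({"lookup_price": lambda sku: 9.99})
    print(runner.call("lookup_price", "A1"))  # 9.99
    runner.kill()
```

Every attempted call is recorded before the checks run, so refused calls also leave a trace for later review.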

Key Takeaways for Leaders

- Build once, use everywhere. Stand up an AI management system (ISO/IEC 42001) that unifies privacy, safety, and security controls. This reduces re‑work across HR, credit, and infrastructure use cases and prepares you for harmonised standards.

- Make suppliers part of your control surface. Demand Annex XII‑style documentation, training‑data summaries, and copyright policies from GPAI providers. Bake these into contracts and acceptance criteria.

- Engineer human oversight, don’t just “require” it. Document who intervenes, when, and how; train reviewers; test the oversight loop as you would disaster recovery.

- Treat red‑teaming as continuous assurance. Adopt the adversarial-testing expectations of the GPAI Code of Practice and extend them to your downstream systems; align with the NIST AI RMF “Measure” and “Manage” functions.

- Prepare for model governance at scale. Centralise model cards, evaluations, and incident records, then share them with business units. Automate logging and traceability to satisfy transparency obligations.

- Use sandboxes strategically. Pilot complex automations (e.g., agents) in state‑run AI sandboxes; the documentation can reduce compliance friction at launch.

- Price penalties and brand risk properly. With fines up to €35m/7%, plus litigation and reputation drag, your ROI calculation should include compliance debt and its interest.
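
Centralised model cards with traceable logging can be sketched as a registry whose event log is hash-chained, so any later edit to a recorded event becomes detectable. The class, the card fields, and the event types are hypothetical; SHA-256 chaining is one common tamper-evidence technique, not something the AI Act mandates.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Stable SHA-256 over a JSON encoding with sorted keys."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class ModelRegistry:
    """Central store of model cards plus a tamper-evident, append-only event log."""

    def __init__(self) -> None:
        self.cards: dict[str, dict] = {}
        self.log: list[dict] = []

    def _append(self, event: dict) -> None:
        # Each entry records the previous entry's hash, chaining the log.
        prev = self.log[-1]["hash"] if self.log else GENESIS
        body = {**event, "prev": prev, "at": datetime.now(timezone.utc).isoformat()}
        body["hash"] = _digest(body)
        self.log.append(body)

    def register(self, model_id: str, card: dict) -> None:
        self.cards[model_id] = card
        self._append({"type": "register", "model": model_id})

    def report_incident(self, model_id: str, summary: str) -> None:
        self._append({"type": "incident", "model": model_id, "summary": summary})

    def verify(self) -> bool:
        """Recompute every hash; False means the log was altered."""
        prev = GENESIS
        for entry in self.log:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```

Business units can read from the same registry, while the chained log gives auditors a single trail of registrations and incidents.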
