The EU AI Act was signed into law on 13 June 2024 and entered into force on 1 August 2024. It establishes a clear framework for developing and using AI systems across the EU, taking a risk-based approach to promote responsible AI practices while protecting citizens’ rights.

The EU AI Act follows a phased approach to implementation, providing organisations with structured timelines to comply with its provisions.

The first milestone falls on 2 February 2025, when the Act’s prohibition on unacceptable-risk AI systems begins to apply, raising an important question:

What is AI, and how do we define it?

What seems like a simple question is hard to answer, as AI is a technically complex and multifaceted concept.

How does your organisation define AI?

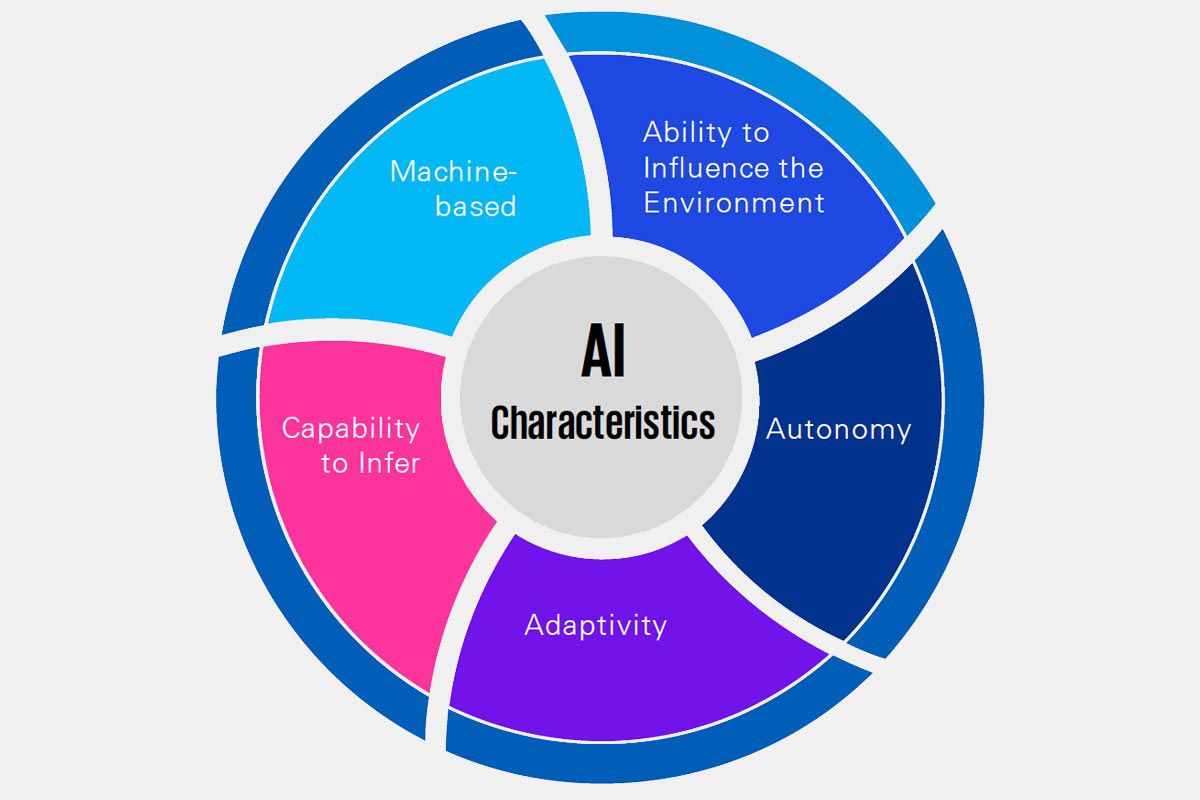

Defining AI requires understanding not only its technical components but also its ethical and societal implications as it continues to evolve. The EU AI Act offers a clear framework for understanding AI and defines the characteristics that are inherent to an AI system. It is important that your organisation clearly understands each of these characteristics as outlined in the Act.

Which AI systems are currently in use?

It is essential for organisations to identify all AI systems in use, whether developed in-house or provided by a third party. This inventory should include, but not be limited to, references to bias assessments, system sources, the type of AI system, robustness criteria and the principles used for internal and external validation. Risk classification criteria must then be applied across the AI inventory to determine which regulatory obligations apply to each system.

This inventory will likely require all business functions to evaluate their workflows and systems to identify areas where AI has been integrated, including tools like predictive models, advanced analytics tools, decision-making algorithms and third-party software platforms. A clear definition of AI is therefore vital, so that each function understands what should and should not be included.
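As an illustration only, an inventory entry could be modelled along the following lines. This is a minimal sketch: the class, field names and example systems (including the reference `BA-001`) are hypothetical and are not prescribed by the Act. It simply captures the attributes mentioned above and reports which ones are still missing for each system.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Source(Enum):
    IN_HOUSE = "in-house"
    THIRD_PARTY = "third party"


@dataclass
class AISystemRecord:
    """One entry in a hypothetical AI inventory (field names are assumptions)."""
    name: str
    business_function: str
    source: Source
    system_type: str                          # e.g. "predictive model"
    bias_assessment_ref: Optional[str] = None
    robustness_criteria: List[str] = field(default_factory=list)
    validation_principles: List[str] = field(default_factory=list)
    risk_class: Optional[str] = None          # set once the system is classified

    def missing_fields(self) -> List[str]:
        """Return the names of inventory attributes not yet completed."""
        missing = []
        if self.bias_assessment_ref is None:
            missing.append("bias_assessment_ref")
        if not self.robustness_criteria:
            missing.append("robustness_criteria")
        if not self.validation_principles:
            missing.append("validation_principles")
        if self.risk_class is None:
            missing.append("risk_class")
        return missing


# Illustrative entries only; these systems and references are invented.
inventory = [
    AISystemRecord(
        name="credit-scoring-model",
        business_function="Lending",
        source=Source.IN_HOUSE,
        system_type="predictive model",
        bias_assessment_ref="BA-001",
        robustness_criteria=["stable under input noise"],
        validation_principles=["independent internal review"],
        risk_class="high",
    ),
    AISystemRecord(
        name="vendor-chatbot",
        business_function="Customer Service",
        source=Source.THIRD_PARTY,
        system_type="third-party software platform",
    ),
]

# Systems whose inventory entries still have gaps.
incomplete = {r.name: r.missing_fields() for r in inventory if r.missing_fields()}
print(incomplete)
```

A structure like this makes gaps visible early, for example third-party tools for which no bias assessment has been requested from the vendor.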

How are AI systems categorised in your organisation?

The EU AI Act defines four risk classifications: unacceptable, high, limited and minimal. On 2 February 2025, several key provisions of the EU AI Act will begin to apply, particularly those on prohibited AI practices that pose unacceptable risks.

These include:

- Manipulative Techniques: AI systems that use subliminal techniques to manipulate human behaviour or decisions will be banned.

- Exploitation of Vulnerabilities: AI systems designed to exploit vulnerabilities of specific groups (like children or disabled individuals) will be prohibited.

- Biometric Categorisation: Systems involved in real-time biometric categorisation (e.g., facial recognition) in public spaces will face restrictions.

- Social Scoring: AI systems that use social scoring for evaluating people’s behaviour in ways that could be discriminatory or harmful will be banned.

For non-compliance with prohibited AI practices, organisations can face fines as high as €35 million or 7% of global annual turnover, whichever is higher.
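To make the "whichever is higher" rule concrete, the sketch below computes the maximum possible fine for a given turnover. The function name and the example turnover figures are hypothetical, and the result is the upper bound described above, not the fine a regulator would actually impose.

```python
FIXED_CAP_EUR = 35_000_000   # EUR 35 million, as stated above
TURNOVER_PERCENT = 7         # 7% of global annual turnover, as stated above


def max_fine_prohibited_practices(global_annual_turnover_eur: int) -> int:
    """Upper bound of the fine in whole euros: the higher of the two caps."""
    turnover_based_cap = global_annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based_cap)


# Hypothetical turnover of EUR 200 million: 7% is EUR 14 million,
# so the fixed EUR 35 million cap is the higher figure.
print(max_fine_prohibited_practices(200_000_000))

# Hypothetical turnover of EUR 1 billion: 7% is EUR 70 million,
# which exceeds the fixed cap.
print(max_fine_prohibited_practices(1_000_000_000))
```

The two caps are equal at a turnover of €500 million; above that, the turnover-based figure sets the ceiling.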

Is your organisation ready?

As organisations adopt AI, it is important to understand the impact of AI on their policies and frameworks, as well as the capabilities and limitations of AI systems, to ensure a smooth and compliant integration.

With less than 12 weeks until the Act’s first prohibitions begin to apply, there are several important steps that must be taken:

- Define AI within your organisation to align with the regulatory definition.

- Build an AI inventory to identify all AI systems in use across business functions.

- Classify the risk level of each AI system based on the criteria set out in the Act.

- Phase out prohibited AI systems that fall under the “unacceptable risk” category.
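The last two steps can be supported by a simple first-pass screen, sketched below. It assumes each system in the inventory has already been tagged by a reviewer; the tag names and example systems are hypothetical, and the tags loosely mirror the prohibited practices listed earlier in this article. A match is a prompt for legal review, not a determination under the Act.

```python
# Hypothetical tags mirroring the prohibited practices described in this article.
PROHIBITED_PRACTICE_TAGS = {
    "subliminal_manipulation",
    "exploits_vulnerabilities",
    "realtime_biometric_categorisation",
    "social_scoring",
}


def flag_for_review(inventory: dict) -> dict:
    """Map each system that matches a prohibited-practice tag to the tags it matched."""
    flagged = {}
    for name, tags in inventory.items():
        matched = sorted(set(tags) & PROHIBITED_PRACTICE_TAGS)
        if matched:
            flagged[name] = matched
    return flagged


# Illustrative inventory only: system names and tags are invented.
example_inventory = {
    "engagement-optimiser": {"recommendation", "subliminal_manipulation"},
    "invoice-ocr": {"document_processing"},
    "citizen-rating-tool": {"social_scoring"},
}

flagged = flag_for_review(example_inventory)
print(flagged)
```

Systems that are not flagged still need to be classified against the Act’s criteria; this screen only surfaces candidates for the “unacceptable risk” category.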

With the EU AI Act having entered into force in August 2024, and the first deadline for prohibited AI set for February 2025, organisations should already be taking steps to ensure compliance.

Contact us

If you need support on any of the steps above, please contact us. We will bring you through the process to ensure a seamless integration of the EU AI Act into your organisation.
