We help you identify, mitigate, and manage AI-specific risks with cutting-edge security, compliance, and governance services.

Artificial Intelligence (AI) and Large Language Models (LLMs) are rapidly transforming the way organizations operate, innovate, and deliver value. From automating business processes to enhancing customer experiences and unlocking new insights, AI-driven solutions are now at the core of digital transformation strategies across industries.

However, as these technologies become more deeply embedded in critical systems and workflows, they introduce a new generation of security, compliance, and ethical challenges. Traditional security measures—such as penetration testing and code reviews—are no longer sufficient to address the unique risks posed by AI and LLMs. These systems can be vulnerable to sophisticated attacks, data leakage, bias, and regulatory non-compliance, all of which can have significant consequences for your organization’s reputation, operations, and trustworthiness.

Our team is dedicated to helping organizations navigate this evolving landscape with confidence.

Our key services

We employ a cutting-edge methodology specifically designed for the security assessment of Generative AI and Large Language Models. Our approach is grounded in globally recognized frameworks such as the OWASP Top 10 for LLMs and MITRE ATLAS, ensuring that our testing is both comprehensive and up to date with the latest industry standards. Through this process, we uncover a wide range of vulnerabilities, including prompt injections—both direct and indirect—system prompt leakage, exposure of training data, and the risk of jailbreaking or bypassing access controls. We also address threats like misinformation, excessive agency, cost harvesting, and model inference abuse. By simulating real-world attack scenarios, we reveal how common web application flaws can influence AI behavior, helping your organization build systems that are resilient against emerging threats.

From the initial concept to full deployment, we guide your AI and machine learning projects to avoid technical debt and ensure long-term scalability. Our support covers every critical phase, including feature engineering and data selection, model training and transfer learning, and the secure deployment of models using modern MLOps practices. We emphasize the importance of comprehensive documentation, ensuring that your solutions are audit-ready and easily maintainable by third-party developers or internal teams. This holistic approach enables you to deliver AI solutions that are not only innovative but also secure, maintainable, and ready to scale as your business grows.

Navigating the rapidly evolving landscape of AI regulation requires expertise and foresight. We support organizations in operationalizing robust AI governance by conducting regulatory gap analyses aligned with standards such as the EU AI Act, NIST, OECD, and ENISA. Our team assists in the creation of tailored policies and control catalogues, and we clarify roles and responsibilities through the development of RACI matrices. We also provide comprehensive risk assessments and mitigation planning, manage the entire AI model lifecycle, and establish effective incident response protocols. With a focus on KPI and KRI development, monitoring, and transparent reporting, our approach ensures that your AI systems remain compliant, ethical, and transparent at every stage of their lifecycle.

Ensuring fairness and ethical integrity in AI models is a core priority for us. Our methodology is designed to detect and address bias across demographic, cultural, and toxicity dimensions, using advanced techniques such as counterfactual testing and statistical fairness evaluation. We implement a range of mitigation strategies, including data curation, model fine-tuning, and human-in-the-loop moderation, to help your models treat all user groups equitably. As standards and societal expectations continue to evolve, we are committed to refining our bias and fairness testing framework, enabling your organization to build trustworthy AI systems that meet the highest ethical standards.

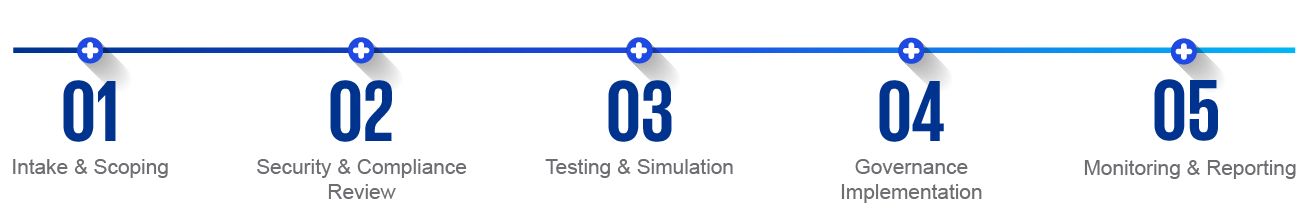

Our process covers the full AI security lifecycle:

Why choose KPMG Cyber Lab?

Expertise That Matters

We bring specialized knowledge in AI security, compliance, and governance—ensuring your solutions are protected against emerging threats and aligned with global standards.

Proven, Standards-Based Methodologies

Our approach is built on trusted frameworks like OWASP, MITRE ATLAS, and the EU AI Act, delivering reliable results and actionable insights.

End-to-End Support

From initial risk assessment to ongoing monitoring, we provide full lifecycle support tailored to your organization’s needs—helping you innovate confidently and responsibly.

Protect your future with KPMG Cyber Lab!