Thanks to data mesh principles, organizations are one step closer to democratizing data and treating data as a strategic asset.

Every day, organizations deal with increasing amounts of data, originating from both inside and outside the organization. Due to centralized data ownership and data access, transparency and timeliness have become bottlenecks in many organizations.

Many companies manage their data like they manage business processes, reports, data models, etc. There might be a central repository of data assets but every business domain uses a very specific subset of those data assets that is relevant to its needs. For instance, we see many finance departments extracting customer data in their own way and interpreting them independently from the sales departments who originally created the data.

Companies invest a lot in this centralized approach. Often there is only one data team in a centrally organized IT infrastructure, which has to manage organization-wide business needs to access, manage, and deliver data to many different stakeholders such as sales, finance, HR, operations, etc. This may lead to disappointment and inefficiencies on both sides, for example:

- The backlog of the data team grows rapidly as new sources of information and new users are added.

- The business has high expectations of the data team; however, the data team is often not sufficiently aware of the relevant business knowledge, data content, and who uses the data for analytics and decision purposes. This results in several back-and-forth iterations between the data team and the business domains trying to understand the needs of the business while the business expects the highest quality data to be delivered.

The main reason why organizations try to bundle the input of all data sources into one central solution - for example, using a central data warehouse - is to prevent the formation of data silos and to streamline the flow of information.

However, data are often not used to their full potential and are mainly used within specific departments by business users who know the context of the data. Business users still struggle to manage data coming from varied sources, leading to more requests for the central data team or data engineers. Cross-domain questions are difficult to answer, and business users cannot easily develop reports involving various data sources in a self-service manner.

As an example, account managers often see the value of proactively targeting leads based on a combination of different parameters, such as company size, sector, financial statements, etc. However, their CRM system does not always provide the ability to combine data from different sources to create useful insights. Some requests can be raised to IT and the data team but doing so doesn’t always result in sustainable, user-friendly solutions. Without organization-wide standardization and governance, this may result in increased complexity and in unused, low-value data silos.

Data mesh aims to eliminate these bottlenecks with a new distributed approach that gives data management and ownership to domain-specific business teams. By decentralizing the ownership of the data per domain, companies can create synergies without having to first break all “data silos”. This can be achieved both by using data for specific purposes and by drawing on each domain's expertise in its own data.

Data and the business insights derived from them can support business outcomes only when meaningful data are made available to every data consumer. Data need to be usable by every employee. Businesses have recognized data as an outcome-driving asset.

Is data mesh a new trend or a view on data architecture that is likely to stay?

Data mesh is a novel approach based on a modern, distributed architecture for BI (Business Intelligence), analytics, and data management. The main objective of data mesh is to eliminate the challenges related to data availability and accessibility at scale but also to data transformation and reusability. Data mesh is about:

- A decentralized strategy that distributes data ownership to domain-specific teams that manage, own, and serve the data as a product.

- Making data accessible, available, discoverable, secure, and interoperable.

- Enabling end-users to easily access and query data where it lives without first transporting it to a data lake or data warehouse.

- Making data consumable by every employee in the organization, not just the data savvy people, data specialists, or IT experts. The value is in getting data into the hands of the relevant data users.

Data mesh allows business users and data scientists to access and analyze data, and to operationalize business insights, from any data source, in any location, without intervention from expert data teams. Faster access to queryable data translates directly into faster time-to-value, with no need to transport the data first.

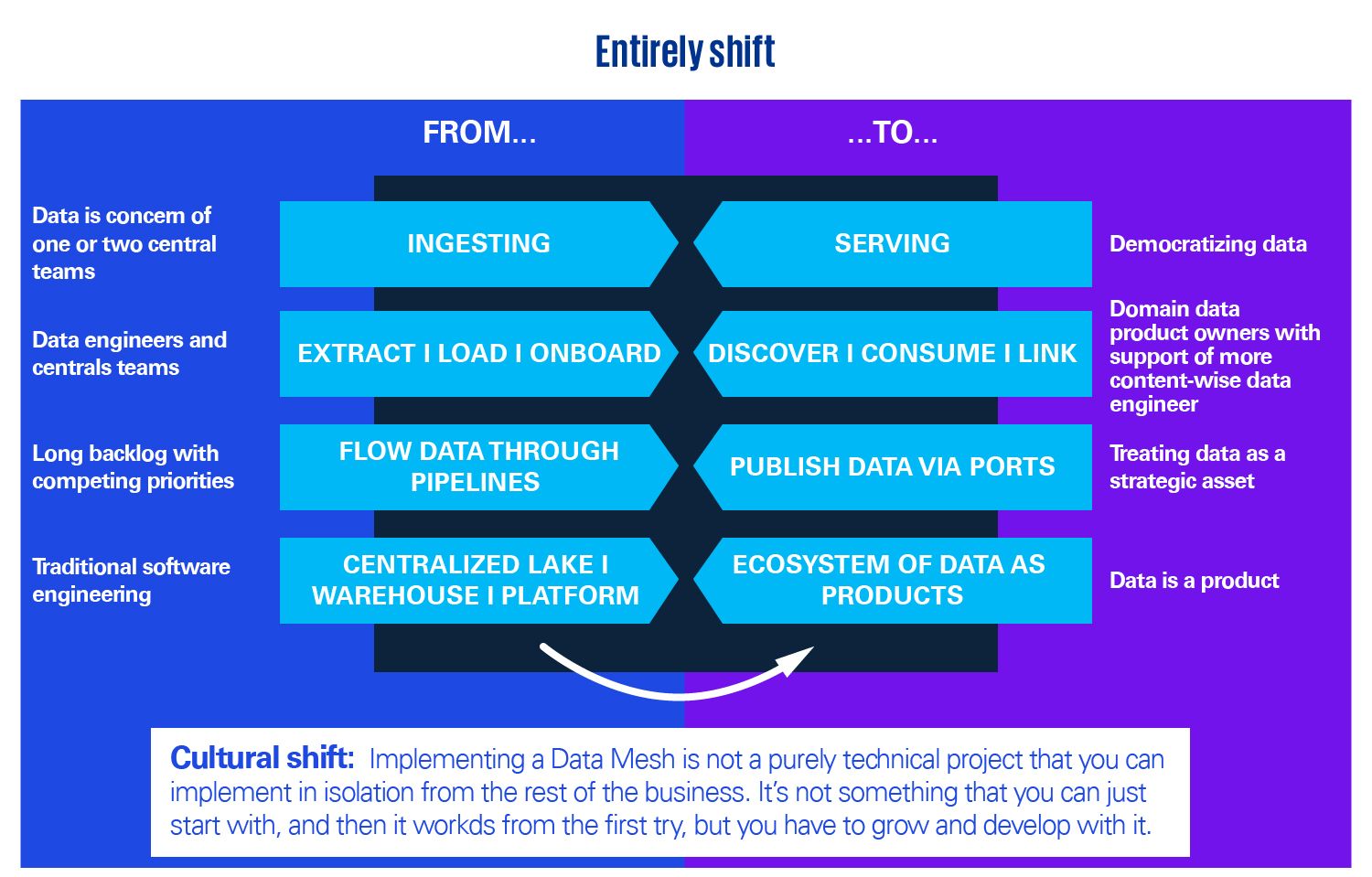

Data mesh is one step closer to democratizing data and enabling the whole organization to treat data as a strategic asset. This shift introduces a new way of working in many aspects.

“This is where soft values and cultural traits are the biggest factors - making sure to treat data as it should be treated. If you really want to become a data-driven company, data cannot only be a concern for one or two central teams.”

Find out the main principles, the opportunities, and KPMG’s view on data mesh in this blogpost to discover if it is a trend that is likely to stay and whether it could be useful for your organization.

Data mesh is a paradigm shift, moving away from traditional monolithic data infrastructures

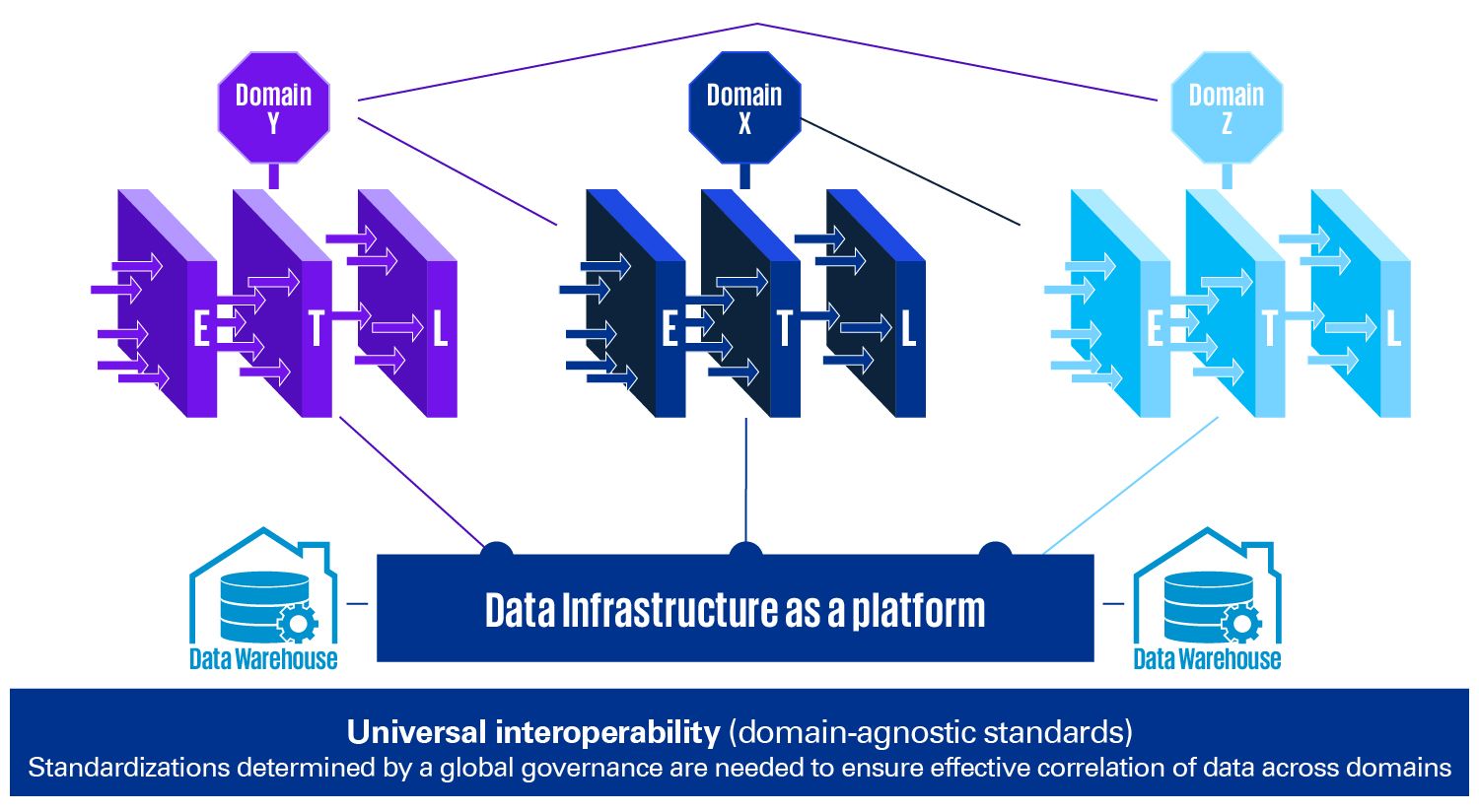

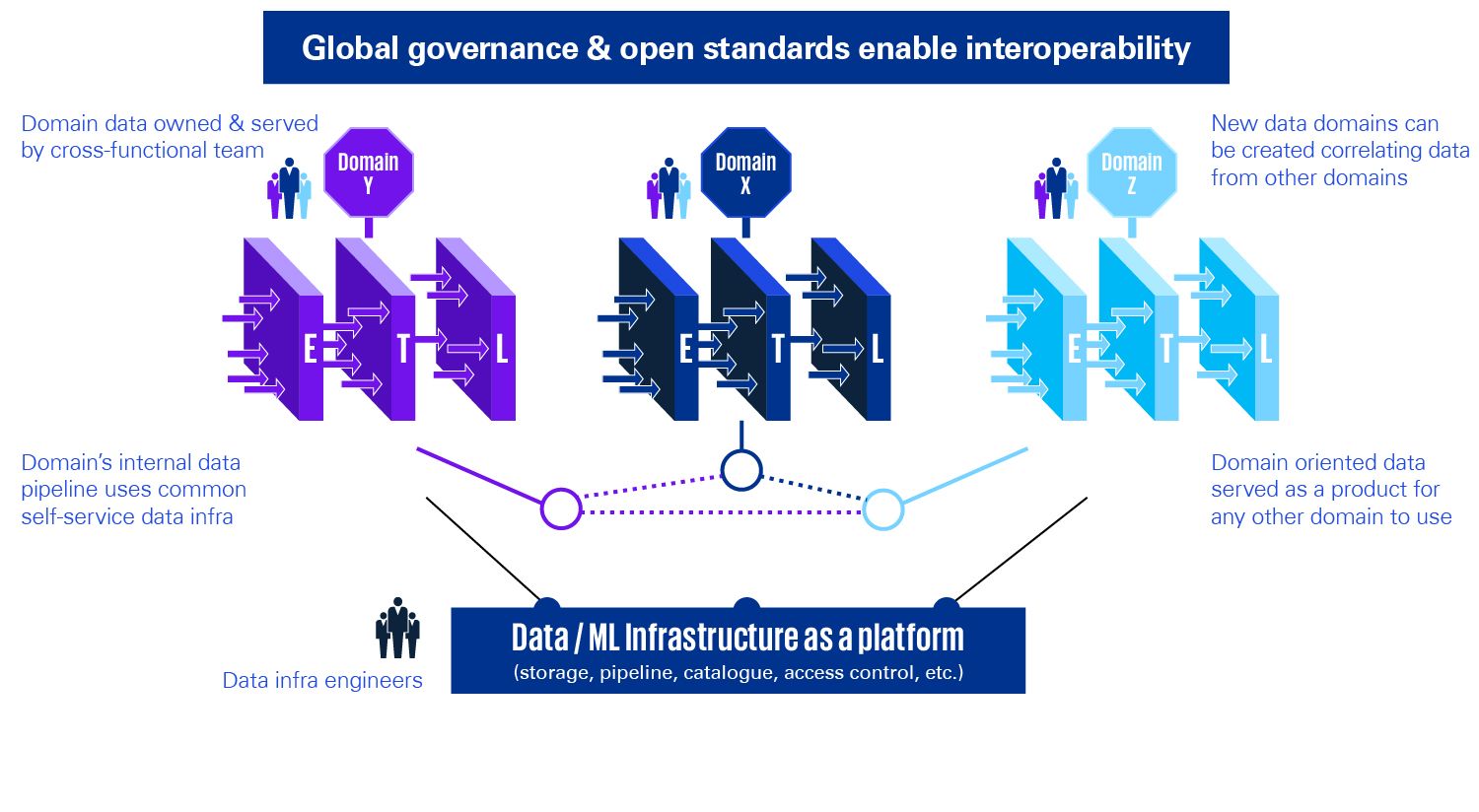

At a high level, a data mesh is composed of three separate components: data sources, data infrastructure, and domain-oriented data pipelines managed by functional owners. Underlying the data mesh architecture is a layer of universal interoperability.

The data infrastructure is a common, domain-agnostic platform whose standards apply across all the domains. It owns and provides the technology that the domains need to capture, process, store, and serve their data products. The platform hides its underlying complexity and offers its components in a self-service manner.

Unlike traditional monolithic data infrastructures that handle the consumption, storage, transformation, and output of data in one central data lake, a data mesh treats data as a product (“data-as-a-product”), with each domain handling its own data pipelines. It avoids large monolithic data architectures and can reduce the substantial operational and storage costs that come with them.

A concern of the distributed domain-oriented data ownership architecture is the duplicated effort and skills required to operate it. Connecting these domains and their associated data assets is a universal interoperability layer that applies data governance centrally, with the same data standards and principles. Standardization, determined by global governance, is needed to ensure effective correlation of data across domains. Data mesh connects siloed data to help enterprises move towards automated analytics at scale.
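To make the idea of centrally governed standards more concrete, the sketch below shows how a domain-owned data product might be checked against global rules before it is published to the mesh. It is only an illustration: the `DataProduct` structure, the required metadata fields, and the allowed formats are all assumptions made for this example, not part of any specific data mesh platform.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical global standards defined by central governance (illustrative only).
REQUIRED_METADATA = {"owner", "description", "refresh_schedule"}
ALLOWED_FORMATS = {"parquet", "csv", "json"}


@dataclass
class DataProduct:
    """A domain-owned dataset published to the mesh (hypothetical structure)."""
    name: str
    domain: str
    data_format: str
    metadata: dict = field(default_factory=dict)


def governance_violations(product: DataProduct) -> list[str]:
    """Return the global standards this data product does not yet meet."""
    violations = []
    missing = REQUIRED_METADATA - set(product.metadata)
    if missing:
        violations.append(f"missing metadata: {sorted(missing)}")
    if product.data_format not in ALLOWED_FORMATS:
        violations.append(f"unsupported format: {product.data_format}")
    return violations


# Example: the sales domain publishes customer data without a refresh schedule.
sales_customers = DataProduct(
    name="customers",
    domain="sales",
    data_format="parquet",
    metadata={"owner": "sales-data-team", "description": "Customer master data"},
)
print(governance_violations(sales_customers))
```

The domains keep ownership of their data, while the same small set of checks applies to every product, which is what allows data to be correlated across domains.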

What do our data lake and data warehouse become then?

The data warehouse still has a role in a data mesh architecture, but it becomes just another consumer of data rather than the oracular source of truth.

“Data lake and data warehouse do not disappear; they just become nodes in the mesh.”

Data mesh lets organizations continue to apply some data lake principles, such as making immutable data available for exploration or analytical use, and to use data lake tooling for the internal implementation of data products or as part of the shared data infrastructure.

The data lake would no longer be the centerpiece. It becomes an implementation detail serving the idea of the domain data product as the first-class concern. The same applies to the data warehouse for business reporting and visualization.

“It is highly likely that we will not need a data lake, because the distributed logs and storage that hold the original data are available for exploration from different addressable immutable datasets as products. However, in cases where we do need to make changes to the original format of the data for further exploration, such as labeling, a domain might create its own lake or data hub to meet its needs.”

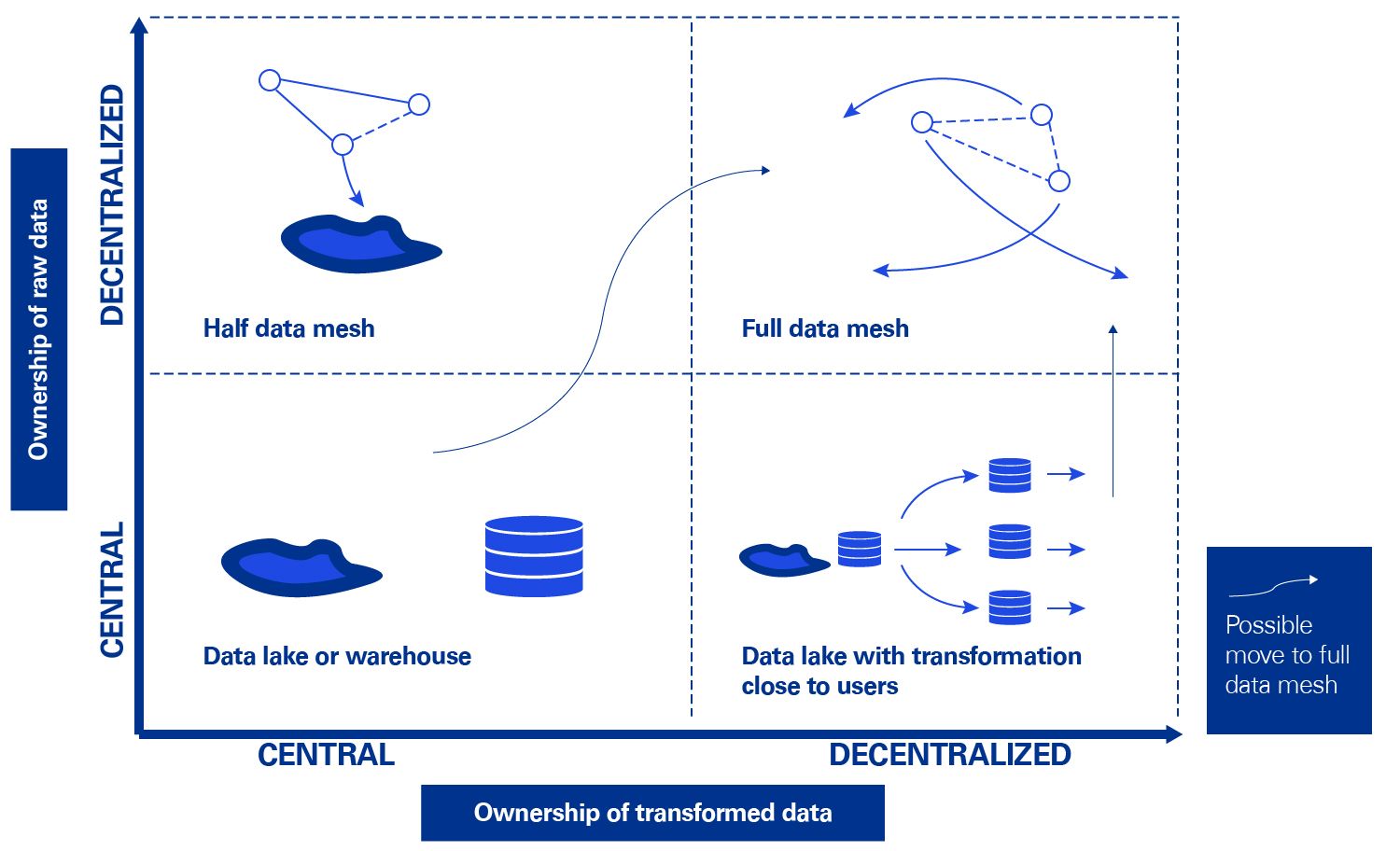

Moving swiftly towards a data mesh architecture is not always the best idea. It is not a choice between one or the other. A hybrid approach is more likely to be sustainable and successful over time when moving towards a full data mesh. The matrix above illustrates this.

The key concept of a data mesh is decentralized ownership. A data warehouse, by contrast, represents centralized ownership of data.

If we now distinguish between raw and transformed data, we can see four possible target data architectures. We can also see two or three different ways of moving from a data lake to a data mesh.

It is possible to move from a central data lake towards combining a data lake with more decentralized sources, and then moving to a full data mesh after some time.

A second option is to implement decentralized ownership of transformed data first, keeping it close to end-users and enabling them to do more than self-service BI.

The raw data could then be accessed by data-knowledgeable users close to decision-makers and transformed using local Extract, Transform, Load (ETL) solutions. The raw data could also be pushed into decentralized data warehouses, where someone closer to the user could do basic ETL on that data. Each department could even have its own small data team doing ETL just for that department. Afterwards, it could also be worth considering the move to a full data mesh.

Summarizing our views on data mesh

Data mesh is more than a new data architecture trend. At the same time, it is important not to underestimate the power of a universal interoperability layer.

Apply data governance centrally, with the same data standards and principles, to ensure effective correlation of data across domains. Data mesh also mandates scalable, self-serve observability into your data.

When packaged together, these functionalities and standardizations provide a robust layer of observability. The data mesh paradigm also prescribes having a standardized, scalable way for individual domains and teams to handle and answer questions like:

- Is my data fresh?

- Is my data fit-for-purpose?

- Is my data broken?

- How do I track schema changes?

- What are the upstream and downstream dependencies of my pipelines?

If you can answer these questions, you can rest assured that your data is fully observable — and can be trusted.
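As a rough illustration, three of the questions above (freshness, schema changes, and downstream dependencies) can be expressed as small, reusable checks that every domain runs in the same way. This is a minimal sketch under simplifying assumptions: the function names, the `{column: type}` schema representation, and the example product names are hypothetical and not taken from any particular observability tool.

```python
from datetime import datetime, timedelta, timezone


def is_fresh(last_updated: datetime, max_age: timedelta, now: datetime) -> bool:
    """Data is fresh if its last update falls within the allowed age."""
    return now - last_updated <= max_age


def schema_changes(old: dict, new: dict) -> dict:
    """Compare two {column: type} mappings and report what changed."""
    return {
        "added": sorted(set(new) - set(old)),
        "removed": sorted(set(old) - set(new)),
        "retyped": sorted(c for c in set(old) & set(new) if old[c] != new[c]),
    }


def downstream_of(product: str, edges: dict) -> set:
    """Collect every product that depends, directly or indirectly, on `product`.

    `edges` maps a product to the list of products that consume it.
    """
    seen, stack = set(), [product]
    while stack:
        for consumer in edges.get(stack.pop(), []):
            if consumer not in seen:
                seen.add(consumer)
                stack.append(consumer)
    return seen


# Hypothetical example values, for illustration only.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
last_updated = datetime(2024, 1, 1, 18, 0, tzinfo=timezone.utc)
print(is_fresh(last_updated, timedelta(hours=24), now))

print(schema_changes({"id": "int", "name": "str"}, {"id": "str", "email": "str"}))

edges = {
    "sales.customers": ["finance.invoices"],
    "finance.invoices": ["exec.dashboard"],
}
print(downstream_of("sales.customers", edges))
```

Keeping such checks standardized, rather than letting each domain invent its own, is one way to get comparable answers across the whole mesh.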

A data mesh is not necessarily about a specific type of technology or code that magically solves data problems at the touch of a button. Instead, it is about the human side of technology and getting teams to be able to work independently to maximize the value of data within that organization.

There is no one ideal moment to consider a data mesh architecture but there are some signs that might indicate you can benefit from the approach:

- You practice domain-driven development, have started working with microservices, or are carrying out a cloud migration.

- You find importing data into the data warehouse costly and are therefore leaving out data sources that are valuable to individual users. Those should be served individually and are perfect candidates for a “carve-out as data mesh node.”

- You are already moving “transformation of data as close to the data-users as possible,” which is usually a sign of a bottleneck in the data -> information -> insight -> decision -> action -> data pipe. This could be considered an intermediate stage.

- If your culture makes it difficult to scale centralized services, data mesh can be a way to scale further by distributing governance responsibilities.

Interested in diving deeper into the topic of data mesh to find out whether it would be a fit for your organization?

Author: Rani Torrekens
