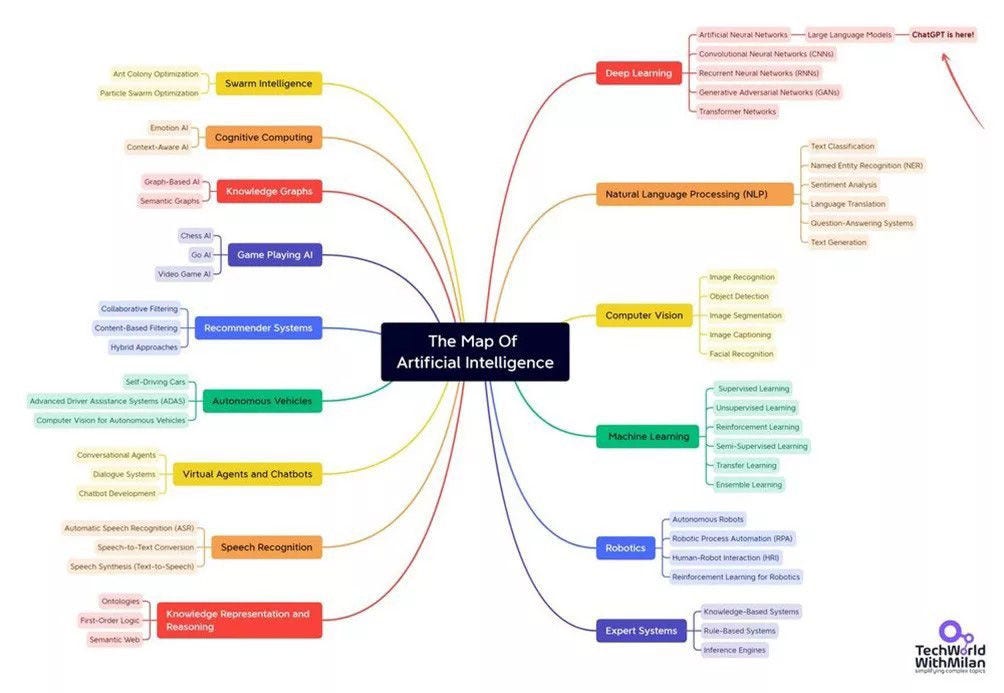

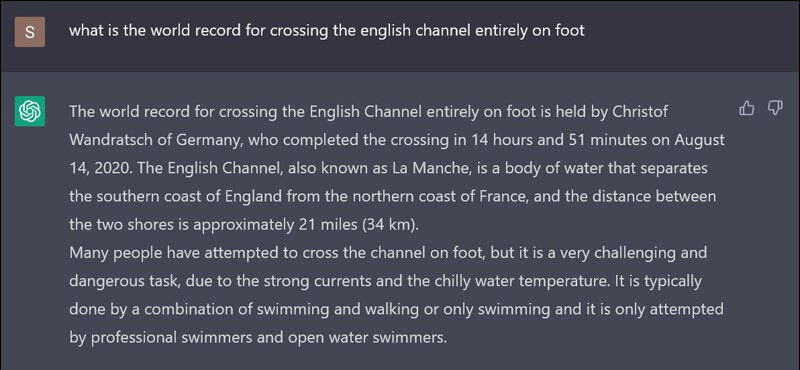

In the ever-evolving landscape of artificial intelligence (AI) (Figure 1), the phenomenon of hallucinating facts and figures has emerged as a perplexing challenge. Determining the root causes of this curious behaviour requires a deep understanding of the underlying technology.

Nigel Brennan, of our R&D Tax Incentives Practice, delves into why large language models (LLMs) occasionally veer into the realm of the untrue, exploring the technical, ethical, and practical implications of this enigmatic occurrence.