"A ship is safe in port, but that's not what ships are built for" is a quote attributed to the American author John August Shedd. Likewise, companies are safest when they refrain from entrepreneurial activities and the risks inherent in them. But that would run counter to the very purpose of a company.

One such inherent risk is credit risk. It includes "[...] the financial effects resulting from business partners not performing their agreed services, not performing them in full or not performing them by the agreed deadlines." (VdT 2023 position paper, p. 16). Managing credit or counterparty risk is a key task of a treasury organization (see VdT 2023 position paper, p. 5 & p. 16) and, according to a recently published KPMG study, a major concern in the multi-crisis environment that many treasurers face today (see Resilient Treasury April 2024, p. 6).

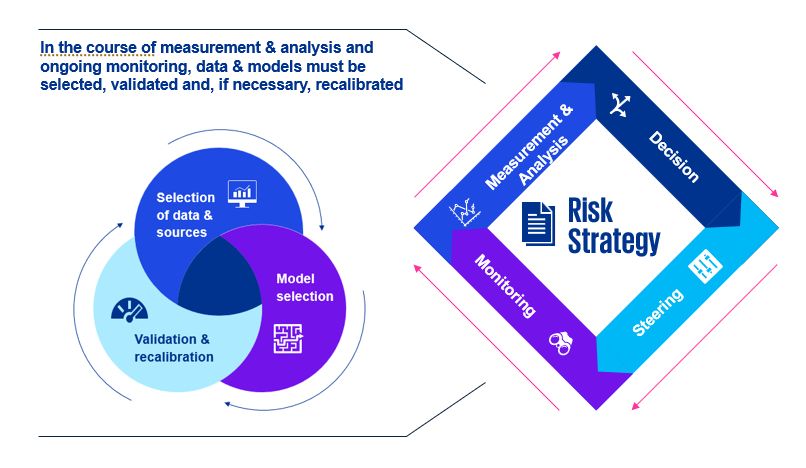

Often, the primary focus is on managing credit risks towards financial institutions, for instance from deposits or from contractual obligations under derivative financial instruments. By contrast, managing "classic credit risks" from deliveries and services is often the responsibility of a sub-division of accounting or, in mature organizations, even of a separate department within the finance division. Either way, the risk strategy should set the framework: how risks are measured and analyzed, which criteria and which control and monitoring mechanisms are relevant to decision-making, and which reporting and escalation formats and channels apply.

Before any decision is made on a new contractual relationship, the risks must be analyzed. They are often expressed as the contractual partner's probability of default (PD), combined with the exposure at default (EAD) and the loss given default (LGD); the product of the three is the expected loss. This risk analysis must then be updated regularly as part of day-to-day monitoring.
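
The three components named above are commonly combined multiplicatively into the expected loss, EL = PD × EAD × LGD. The snippet below is a minimal Python sketch of that arithmetic, assuming a one-year horizon; the `Counterparty` class, the function name and all input figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Counterparty:
    name: str
    pd: float   # annual probability of default, e.g. 0.019 for 1.9 %
    ead: float  # exposure at default, e.g. open receivables in EUR
    lgd: float  # loss given default as a share of exposure (1 - recovery rate)


def expected_loss(cp: Counterparty) -> float:
    """Expected loss over one year: EL = PD * EAD * LGD."""
    for label, value in (("pd", cp.pd), ("lgd", cp.lgd)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{label} must be between 0 and 1, got {value}")
    if cp.ead < 0:
        raise ValueError("ead must not be negative")
    return cp.pd * cp.ead * cp.lgd


# Hypothetical customer: 1.9 % PD, EUR 500,000 exposure, 60 % loss given default
customer = Counterparty("Example GmbH", pd=0.019, ead=500_000.0, lgd=0.6)
print(f"{customer.name}: expected loss EUR {expected_loss(customer):,.0f}")
```

Keeping the three factors separate makes it visible which lever (counterparty quality, exposure limits or collateral) drives a given loss estimate.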

Credit risk analysis therefore serves both as the starting point of the credit risk process and as a recurring activity within ongoing monitoring. This ongoing analysis focuses on collecting, processing and evaluating relevant data, so selecting the right data and sources for the chosen models is crucial. At the same time, the models themselves must be checked continuously to ensure that they are up to date and accurate, and recalibrated if necessary. The choice of model is often determined by the availability of suitable data, the risk position and, in some cases, the available budget.
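
The text does not prescribe how accuracy should be checked. One common approach for PD models is a binomial backtest per rating grade: if defaults were independent and the predicted PD were correct, how likely would at least the observed number of defaults be? A minimal sketch, assuming independent defaults (which understates clustering in a crisis) and hypothetical portfolio figures; the function names are illustrative.

```python
from math import comb


def binomial_backtest(predicted_pd: float, n_obligors: int, observed_defaults: int) -> float:
    """One-sided p-value: probability of at least `observed_defaults` defaults
    among `n_obligors` if the predicted PD were correct and defaults independent."""
    return sum(
        comb(n_obligors, k) * predicted_pd**k * (1 - predicted_pd) ** (n_obligors - k)
        for k in range(observed_defaults, n_obligors + 1)
    )


def needs_review(predicted_pd: float, n_obligors: int, observed_defaults: int,
                 alpha: float = 0.05) -> bool:
    """Flag the grade for review if that many defaults are implausible under the model."""
    return binomial_backtest(predicted_pd, n_obligors, observed_defaults) < alpha


# Hypothetical rating grade: 200 customers, model PD 1.9 % (about 3.8 expected defaults)
print(needs_review(0.019, 200, 4))  # four defaults: consistent with the model
print(needs_review(0.019, 200, 9))  # nine defaults: PD looks too low
```

A low p-value does not say by how much the PD is off, only that the grade should be reviewed and possibly recalibrated.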

Fig. 1: Credit risk analysis in the context of risk management

Source: KPMG AG

The following discussion relates primarily to how the probability of default is determined.

At its simplest, rating agency data can be standardized to a uniform measure with the aid of translation tables. For example, a Baa rating from Moody's corresponds to a BBB rating from Standard & Poor's or Fitch and indicates a moderate credit risk (https://www.boerse-frankfurt.de/wissen/wertpapiere/anleihen/rating-matrix) with an average probability of default of 1.9% p.a. (https://www.deltavalue.de/credit-rating/). Note, however, that ratings are usually issued for capital market-oriented companies or for companies that want to present themselves to a mostly international investor audience.
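
A translation table of this kind can be sketched as a few dictionaries. The notch-for-notch equivalence between Moody's and S&P/Fitch notation is the usual market convention; the PD table contains only the 1.9% BBB figure quoted above, and the function names and agency keys (`"moodys"`, `"sp"`, `"fitch"`) are hypothetical.

```python
from typing import Optional

# Notch-for-notch equivalence between Moody's and S&P/Fitch notation
MOODYS_TO_SP = {
    "Aaa": "AAA",
    "Aa1": "AA+", "Aa2": "AA", "Aa3": "AA-",
    "A1": "A+", "A2": "A", "A3": "A-",
    "Baa1": "BBB+", "Baa2": "BBB", "Baa3": "BBB-",
    "Ba1": "BB+", "Ba2": "BB", "Ba3": "BB-",
    "B1": "B+", "B2": "B", "B3": "B-",
    "Caa1": "CCC+", "Caa2": "CCC", "Caa3": "CCC-",
}
# Broad Moody's categories without numeric modifier, e.g. "Baa" -> "BBB"
MOODYS_BROAD = {"Aaa": "AAA", "Aa": "AA", "A": "A", "Baa": "BBB", "Ba": "BB", "B": "B", "Caa": "CCC"}

# Average annual PD per broad category. Only the BBB figure (1.9 % p.a.) comes from
# the text; the other categories must be filled in from a chosen source.
PD_BY_CATEGORY = {"BBB": 0.019}


def to_uniform(rating: str, agency: str) -> str:
    """Translate a rating into S&P/Fitch notation, used here as the uniform scale."""
    agency = agency.lower()
    if agency == "moodys":
        if rating in MOODYS_TO_SP:
            return MOODYS_TO_SP[rating]
        if rating in MOODYS_BROAD:
            return MOODYS_BROAD[rating]
    elif agency in ("sp", "fitch"):
        if rating in MOODYS_TO_SP.values() or rating in MOODYS_BROAD.values():
            return rating
    else:
        raise ValueError(f"unknown agency: {agency}")
    raise ValueError(f"unknown {agency} rating: {rating}")


def broad_category(uniform_rating: str) -> str:
    """Strip the +/- notch modifier, e.g. 'BBB-' -> 'BBB'."""
    return uniform_rating.rstrip("+-")


def annual_pd(rating: str, agency: str) -> Optional[float]:
    """Average annual PD of the rating's broad category, or None if not in the table."""
    return PD_BY_CATEGORY.get(broad_category(to_uniform(rating, agency)))


def is_investment_grade(rating: str, agency: str) -> bool:
    return broad_category(to_uniform(rating, agency)) in {"AAA", "AA", "A", "BBB"}


print(to_uniform("Baa2", "moodys"), annual_pd("Baa", "moodys"), annual_pd("BBB-", "fitch"))
```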

For small and medium-sized companies, credit agencies or credit insurers can act as information providers. Their data also comes in different formats: a Creditreform creditworthiness index of 208, for example, corresponds to an annual probability of default of 0.12% (https://www.creditreform.de/loesungen/bonitaet-risikobewertung), whereas a Dun & Bradstreet Failure Score between 1510 and 1569 means an average annual default risk of 0.09% (https://www.dnb.com/resources/financial-stress-score-definition-information.html). Regional expertise must also be factored in when choosing a data provider. In Germany, for example, Schufa has high market coverage, while Kreditschutzverband is the leading provider in the Austrian market (https://www.finanz.at/kredit/kreditschutzverband/).
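
Provider-specific scores can be brought onto the same footing by mapping score bands to an annual PD. The sketch below contains only the two data points quoted above; the provider keys are hypothetical, and a real table would need the full band tables published by each provider. The scales run in opposite directions (for the Creditreform index a lower value is better, for the D&B score a higher one), which is one reason to compare PDs rather than raw scores.

```python
from typing import NamedTuple, Optional


class ScoreBand(NamedTuple):
    provider: str
    low: int          # inclusive lower score bound
    high: int         # inclusive upper score bound
    annual_pd: float  # annual probability of default for this band


# Only the two data points quoted in the text; complete from the providers' tables.
BANDS = [
    ScoreBand("creditreform", 208, 208, 0.0012),   # index 208 -> 0.12 % p.a.
    ScoreBand("dnb_failure", 1510, 1569, 0.0009),  # 1510-1569 -> 0.09 % p.a.
]


def uniform_pd(provider: str, score: int) -> Optional[float]:
    """Look up the annual PD for a provider score, or None if no band matches."""
    for band in BANDS:
        if band.provider == provider and band.low <= score <= band.high:
            return band.annual_pd
    return None


def to_basis_points(pd: float) -> float:
    return pd * 10_000


for provider, score in [("creditreform", 208), ("dnb_failure", 1540), ("dnb_failure", 1200)]:
    pd = uniform_pd(provider, score)
    text = "no band in table" if pd is None else f"{to_basis_points(pd):.0f} bp p.a."
    print(f"{provider} {score}: {text}")
```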

More sophisticated approaches use scorecards that weight various criteria, such as balance sheet ratios or macroeconomic factors, and aggregate them into a probability of default using a corresponding credit risk model. Besides the service providers mentioned above, the respective commercial registers are potential data sources, as they provide annual financial statements and balance sheets. It is also conceivable to connect market data providers such as Refinitiv or Bloomberg and include, for example, interest rates, CDS spreads, unemployment figures or inflation figures in the analysis; these provide information either about the company directly or about the economic environment in which it operates.
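
A scorecard of this kind is often implemented as a logistic model: the weighted criteria are summed into a score (the log-odds), which the logistic function maps to a PD between 0 and 1. The weights, intercept and feature names below are purely hypothetical; in practice they would be estimated, for example by logistic regression, on the company's own default history and checked regularly.

```python
import math

# Hypothetical scorecard parameters (illustrative, not estimated from data)
INTERCEPT = -4.0
WEIGHTS = {
    "equity_ratio": -3.0,            # equity / total assets: more equity -> lower PD
    "debt_to_ebitda": 0.25,          # leverage: more debt -> higher PD
    "days_sales_outstanding": 0.01,  # how long the company takes to collect receivables
    "unemployment_rate": 0.10,       # macroeconomic factor, in percent
}


def probability_of_default(features: dict) -> float:
    """Weighted sum of the criteria (log-odds), mapped to a PD via the logistic function."""
    log_odds = INTERCEPT + sum(weight * features[name] for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-log_odds))


company = {"equity_ratio": 0.35, "debt_to_ebitda": 2.5,
           "days_sales_outstanding": 45, "unemployment_rate": 5.8}
print(f"PD: {probability_of_default(company):.2%}")
```

The logistic link guarantees a valid probability whatever the inputs, and each weight keeps a direct, explainable meaning.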

More recently, additional data sources have also come into focus, such as social media content and other publicly available information on the internet. These sources, however, carry the risk of a subjectively distorted picture shaped by herd effects, for example when many people uncritically adopt an opinion that is then taken to be relevant or correct even though it is not necessarily rational. On the other hand, they may contain information that signals risks far earlier than conventional data sources, which are often published with a time lag. One example is the insolvency of Silicon Valley Bank: on 9 March 2023, before trading had even started, investors were already discussing the bank intensively on Twitter, presumably fueling the bank run that led to its collapse the following day (https://fortune.com/2023/05/02/did-twitter-cause-silicon-valley-bank-run-financial-panic-economists-research/).
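
As a simple illustration of such an early-warning signal, a sudden spike in the number of daily mentions of a counterparty can be flagged when it lies far above its recent average. The sketch assumes the daily counts have already been collected (how is out of scope) and uses a rolling z-score with a hypothetical window and threshold. It says nothing about whether the content of the mentions is accurate, which is exactly the herd-effect caveat above.

```python
import math
from statistics import mean, stdev


def mention_alerts(daily_mentions, window=7, threshold=3.0):
    """Return indices of days whose mention count lies more than `threshold`
    standard deviations above the mean of the preceding `window` days."""
    alerts = []
    for day in range(window, len(daily_mentions)):
        history = daily_mentions[day - window:day]
        mu, sigma = mean(history), stdev(history)
        count = daily_mentions[day]
        if sigma > 0:
            z = (count - mu) / sigma
        else:
            # Flat history: any increase counts as a spike
            z = math.inf if count > mu else 0.0
        if z > threshold:
            alerts.append(day)
    return alerts


# Hypothetical daily mention counts for one counterparty
counts = [40, 35, 42, 38, 41, 37, 39, 44, 400]
print(mention_alerts(counts))
```

An alert of this kind would only trigger a manual review of the counterparty, not an automatic rating change.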

Another factor that should not be underestimated is valuable in-house information that can be incorporated into the analysis, e.g. customers' historical payment behavior. A frequent challenge here is that such data is not sufficiently available because of fragmented IT landscapes. Another problem is interpreting the data: can a counterparty be classified as risk-free if it has never paid late, let alone defaulted?
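
In-house payment data can be condensed into simple behavioral metrics. The sketch also addresses the closing question: a spotless record of n invoices does not prove a late-payment probability of zero, it only bounds it, and the exact 95% bound below is close to the well-known "rule of three" approximation 3/n. Function names and invoice dates are hypothetical, invoices are assumed independent, and late payment is not the same as default.

```python
from datetime import date


def payment_profile(invoices):
    """Summarize (due_date, payment_date) pairs: share paid late, average delay of late ones."""
    if not invoices:
        raise ValueError("no invoices")
    delays = [(paid - due).days for due, paid in invoices]
    late = [d for d in delays if d > 0]
    return {
        "invoices": len(delays),
        "share_late": len(late) / len(delays),
        "avg_days_late": sum(late) / len(late) if late else 0.0,
    }


def late_rate_upper_bound(n_invoices, confidence=0.95):
    """Upper confidence bound for the late-payment rate when none of n_invoices
    was late: the p that solves (1 - p) ** n = 1 - confidence."""
    return 1 - (1 - confidence) ** (1 / n_invoices)


history = [
    (date(2024, 1, 31), date(2024, 1, 30)),  # paid one day early
    (date(2024, 2, 29), date(2024, 3, 10)),  # paid ten days late
    (date(2024, 3, 31), date(2024, 3, 31)),  # paid on the due date
]
print(payment_profile(history))
# 50 invoices, none late: the late-payment rate is bounded, not zero
print(f"{late_rate_upper_bound(50):.1%}")
```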

Given the rapid pace of technological development, modern approaches have started to integrate artificial intelligence into the analysis process. This requires the right conditions, however: an AI model must be sufficiently "trained" with data, and that data must be of high quality, regardless of whether it is available in the company or procured externally. The most common problems are inconsistency, inaccuracy, incompleteness, duplicates, obsolete information, irrelevance and lack of standardization, among others (see https://www.forbes.com/sites/garydrenik/2023/08/15/data-quality-for-good-ai-outcomes/). Initial AI-based tools for quality assurance and data cleansing are already addressing these problems (https://blog.treasuredata.com/blog/2023/06/13/ai-ml-data-quality/). Large language models have also found their way into credit management, for instance in processing companies' annual financial statements. They already provide employees with relevant information in condensed form or feed probability-of-default models directly with the data they need.
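
Several of the problems listed can be caught with simple rule-based checks before data reaches a model. The sketch below covers incompleteness, duplicates, obsolete records and lack of standardization (country codes); inaccuracy and irrelevance require domain knowledge and are not checked. It is deliberately rule-based rather than AI-based, and the field names, the one-year staleness limit and the records are hypothetical.

```python
from datetime import date

REQUIRED = ("customer_id", "name", "country", "last_updated")


def quality_report(records, as_of: date, max_age_days: int = 365):
    """Return customer IDs per issue type: incomplete, duplicate, obsolete, non_standard."""
    issues = {"incomplete": [], "duplicate": [], "obsolete": [], "non_standard": []}
    seen = {}
    for rec in records:
        rid = rec.get("customer_id", "?")
        if any(not rec.get(field) for field in REQUIRED):
            issues["incomplete"].append(rid)
            continue
        # Duplicate key: name normalized for case and whitespace, plus country
        key = (" ".join(rec["name"].lower().split()), rec["country"].upper())
        if key in seen:
            issues["duplicate"].append(rid)
        else:
            seen[key] = rid
        if (as_of - rec["last_updated"]).days > max_age_days:
            issues["obsolete"].append(rid)
        country = rec["country"]
        if not (len(country) == 2 and country.isalpha() and country.isupper()):
            issues["non_standard"].append(rid)  # expects two-letter ISO-style codes
    return issues


records = [
    {"customer_id": "C1", "name": "Example GmbH", "country": "DE", "last_updated": date(2024, 5, 1)},
    {"customer_id": "C2", "name": "example  gmbh", "country": "DE", "last_updated": date(2024, 6, 1)},
    {"customer_id": "C3", "name": "Muster AG", "country": "Germany", "last_updated": date(2021, 1, 15)},
    {"customer_id": "C4", "name": "", "country": "AT", "last_updated": date(2024, 6, 1)},
]
print(quality_report(records, as_of=date(2024, 7, 1)))
```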

Alongside quality, a sufficiently large number of data points is needed to increase the informative value of a forecast produced by an AI model. But even with sufficient data quality and quantity, there remains the risk that historical data only partially captures relevant relationships, for example those triggered by the supply chain disruptions caused by the container ship "Ever Given", which ran aground in the Suez Canal in March 2021, the ECB's historic interest rate turnaround in 2022, or the Houthi attacks on trade routes in the Red Sea, which are causing monthly extra costs in the double-digit millions for Hapag-Lloyd alone (https://www.wiwo.de/politik/ausland/rotes-meer-diesen-einfluss-hat-der-konflikt-mit-den-huthi-auf-unternehmen/29597872.html). Such events and their aftermath reinforce the multi-crisis environment mentioned in the KPMG study. Exploiting data from news and social media offers a real opportunity to offset this historical bias (https://fastercapital.com/de/inhalt/Trends-bei-der-Kreditrisikoprognose--So-bleiben-Sie-mit-Trends-und-Innovationen-bei-der-Kreditrisikoprognose-auf-dem-Laufenden.html), as machines can evaluate huge amounts of data very quickly and recognize potential correlations that remain hidden to humans or that humans can only identify more slowly.
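
Why more data points help can be made concrete with a confidence interval for an observed default rate. The sketch uses the Wilson score interval, one common choice, assuming independent defaults and hypothetical counts: the observed rate is 1.9% in both cases, but ten times as many observations narrow the interval by roughly a factor of √10. What more data cannot do is add events that never occurred in the history, which is the point made above.

```python
import math


def wilson_interval(defaults: int, n: int, z: float = 1.96):
    """Approximate 95 % Wilson score interval for an observed default rate."""
    p_hat = defaults / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half_width = z / denom * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2))
    return centre - half_width, centre + half_width


for defaults, n in [(19, 1_000), (190, 10_000)]:
    low, high = wilson_interval(defaults, n)
    print(f"{defaults}/{n}: observed {defaults / n:.1%}, 95 % interval {low:.2%} to {high:.2%}")
```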

This means that artificial intelligence is particularly advantageous when processing mass data. Overreliance on it, however, can lead to blind trust in the technology, even though AI hallucinations have already attracted a great deal of attention and prompted data protection advocates to file complaints against OpenAI (https://www.zeit.de/digital/datenschutz/2024-04/datenschutz-chatgpt-open-ai-beschwerde). There is also a danger that (partly unconscious) biases contained in the source data influence the result in undesirable ways. A company that is currently receiving a lot of media attention, for example, could be favored, even though such attention does not necessarily correlate with creditworthiness. One way to counter this is to always have the AI report transparently on how it reaches its decisions and which sources it analyzes. This still poses major challenges for current models and approaches.
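
For additive models such as the scorecards described earlier, this kind of transparency can be achieved by reporting each criterion's contribution to the score. The sketch below is hypothetical (self-contained illustrative weights and feature names) and deliberately gives media attention a weight of zero, so the report shows explicitly that it does not drive the result. Complex AI models need dedicated explanation methods; this only illustrates the principle.

```python
import math

# Hypothetical scorecard; weights are illustrative, not estimated from data
INTERCEPT = -4.0
WEIGHTS = {
    "equity_ratio": -3.0,
    "debt_to_ebitda": 0.25,
    "media_mentions_per_day": 0.0,  # media attention deliberately given no weight
}


def explain(features):
    """Return the PD and each feature's contribution to the log-odds, largest first."""
    contributions = {name: weight * features[name] for name, weight in WEIGHTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    pd = 1.0 / (1.0 + math.exp(-log_odds))
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return pd, ranked


pd, ranked = explain({"equity_ratio": 0.35, "debt_to_ebitda": 2.5, "media_mentions_per_day": 120})
print(f"PD {pd:.2%}")
for name, contribution in ranked:
    print(f"  {name:<24} {contribution:+.3f}")
```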

Whichever model is used, it must always be checked whether it is still up to date and provides accurate results, especially under non-linear, complex and unique conditions. In the wake of the COVID-19 pandemic, expected default risks increased for many borrowers, among other things due to supply bottlenecks or damage to the business basis, for example in the restaurant industry. This development was partly offset, however, by adjustments to German insolvency law and extensive financial support measures. This shows that far-reaching model adjustments may be necessary even at short notice to adequately reflect current reality.
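
One indicator commonly used to detect that conditions have shifted away from those a model was built on is the population stability index (PSI), which compares the distribution of customers across rating grades at calibration time with today's distribution. A minimal sketch with hypothetical shares; the 0.10 and 0.25 thresholds are a widely quoted rule of thumb rather than a standard, and a PSI alone does not show whether the PDs themselves are still accurate.

```python
import math


def population_stability_index(expected_shares, actual_shares, eps=1e-6):
    """PSI = sum over bands of (actual - expected) * ln(actual / expected)."""
    if len(expected_shares) != len(actual_shares):
        raise ValueError("both distributions need the same bands")
    psi = 0.0
    for expected, actual in zip(expected_shares, actual_shares):
        expected, actual = max(expected, eps), max(actual, eps)  # avoid log(0)
        psi += (actual - expected) * math.log(actual / expected)
    return psi


def stability_label(psi):
    # Rule-of-thumb thresholds, not a regulatory standard
    if psi < 0.10:
        return "stable"
    if psi < 0.25:
        return "moderate shift: investigate"
    return "significant shift: review or recalibrate model"


# Hypothetical share of customers per rating grade, best to worst
at_calibration = [0.10, 0.25, 0.35, 0.20, 0.10]
today = [0.05, 0.15, 0.30, 0.30, 0.20]
psi = population_stability_index(at_calibration, today)
print(f"PSI {psi:.3f}: {stability_label(psi)}")
```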

In other words, the models used need to be consistently high-performing, stable, rational and efficient. Combinations of statistical models and artificial intelligence may help to improve credit risk forecasts in the future. In any case, the expected benefits of such models should be carefully weighed against their costs, taking due account of the general conditions within the company and of the market environment.

Source: KPMG Corporate Treasury News, Edition 145, July 2024

Authors:

Nils Bothe, Partner, Finance and Treasury Management, Corporate Treasury Advisory, KPMG AG

Philipp Knuth, Senior Manager, Finance and Treasury Management, Corporate Treasury Advisory, KPMG AG
