Financial services firms are embracing artificial intelligence and emerging technologies like never before. But are they ready to manage the risks?

Ask any financial services CEO if their organization is using or piloting artificial intelligence (AI) and you’re sure to get a positive response. In fact, in a recent global survey of financial services CEOs, just 1 percent admitted they had not yet implemented any AI in their organization at all.

Not surprisingly, financial services firms are becoming increasingly aware of the significant benefits that AI can deliver, from improving the customer experience and organizational productivity to enhancing data governance and analytics. And they are beginning to realize how AI, machine learning and cognitive capabilities could enable the development of new products and new demand that would not have been possible using traditional technologies. Our survey shows that the majority are now implementing AI in a wide range of business processes.

While this is great news for financial services firms and their customers, the widespread adoption of AI across the organization also creates massive headaches and challenges for those charged with managing risk.

New risk challenges emerge

Part of the problem is the technology itself. By replicating a single mistake at a massive scale, a ‘rogue’ AI or algorithm has the potential to magnify small issues very quickly. AI is also capable of learning on its own, which means that the permutations of individual risks can be hard to predict. Whereas a human rogue employee is limited by capacity and access, an AI can feed bad data or decisions into multiple processes at lightning speed. And that can be hard to catch and control.

The ‘democratization’ of AI is also creating challenges for risk managers. The reality is that, with today’s technologies, almost anyone can design and deploy a bot. As business units start to see the value of AI within their processes, the number of bots operating in the organization is proliferating quickly. Few financial services firms truly know how many bots are operating across the enterprise and that means they can’t fully understand and assess the risks.

All of this would be fine if risk managers were positioned to help organizations identify, control and manage the risks. But our experience suggests this is rarely the case. In part, this is because few risk managers have the right capabilities or understanding of the underlying algorithms to properly assess where the risks lie and how they can be managed. But the bigger problem is that risk management is — all too often — only brought into the equation once the bot has been developed. And that is far too late for them to ‘get up to speed’ on the technologies and provide valuable input that can help implement effective controls from the outset.

It’s not just financial services decision-makers and risk managers who are struggling with these challenges. So, too, are regulators, boards and investors. They are starting to ask difficult questions of the business. And they are not confident about the answers they are receiving.

Getting on the right path

There are five things that financial services organizations could be doing to improve their control and governance over AI.

- Put your arms around your bots. The first step to understanding and managing AI is knowing where it currently resides, what value it currently delivers and how it fits into the corporate strategy. It’s also worth taking the time to understand who developed the algorithm (was it an external vendor?) and who currently owns the AI. Look at the entire organizational ecosystem — including suppliers, data providers and cloud service providers.

- Build AI thinking into your risk function. We have helped a number of banks and insurers identify and assess the capabilities and skills needed to create an effective risk function for an AI-enabled organization. It’s not just about risk managers having the right skills. It’s also about becoming more agile, technologically savvy and commercially focused. Particular attention should be placed on developing sustainable learning programs that build the theoretical, practical and contextual capabilities required to encourage continuous learning.

- Invest in data. Data is a fundamental building block for getting value from new and emerging technologies like AI. And our experience suggests that most financial institutions will need to continue to invest heavily in ensuring their data is reliable, accessible and secure. This is not just about feeding the right data into the machine; it is also about helping to mitigate operational risks and potential biases by verifying the quality and integrity of the data the organization is using.

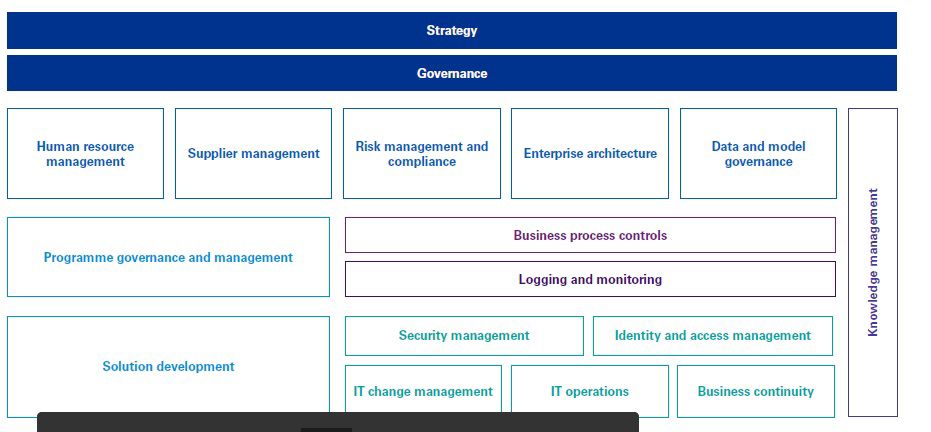

- Develop an AI-ready risk and control framework. While some internal audit functions and risk managers are using existing frameworks such as SR 11-7 and the OCC's Risk Management Principles as a starting point, we believe that AI professionals, risk managers and boards will need to develop a purpose-built risk and control framework (figure 1) that can help mitigate data privacy, security and regulatory risks across the entire lifecycle of the model. For more details on KPMG's Risk and Controls framework, see the AI Risk and Controls Matrix.

- Go beyond the technology. The reality is that AI — once fully realized — will likely extend across the entire culture of a financial services firm. And that will require decision-makers to think critically about how they ensure they have the right skills, capabilities and culture to encourage employees to properly operate, manage and control the AI they work with. More than just new technology skills, organizations will need to consider how they transform the organizational mind-set to apply a risk lens to AI development and management.

Figure 1: Risk and Control Framework
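As an illustration of the first recommendation above (knowing where AI resides, who developed it and who owns it), the following is a minimal sketch of a bot inventory. It is not part of the KPMG framework; the `BotRecord` fields, the helper functions and the sample entries are all hypothetical and would need to be adapted to an organization's own governance model.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class BotRecord:
    """One entry in a hypothetical enterprise AI/bot inventory."""
    name: str
    business_unit: str
    owner: Optional[str]            # who currently owns the AI (None if unknown)
    developed_by: str               # "internal" or the name of an external vendor
    data_sources: List[str] = field(default_factory=list)
    risk_reviewed: bool = False     # has the risk function assessed this bot?


def inventory_gaps(records: List[BotRecord]) -> List[str]:
    """Return the names of bots that have no owner or no risk review."""
    return [r.name for r in records if r.owner is None or not r.risk_reviewed]


def external_vendors(records: List[BotRecord]) -> List[str]:
    """Return the sorted set of external vendors that developed bots."""
    return sorted({r.developed_by for r in records if r.developed_by != "internal"})


# Illustrative entries only; names and vendors are invented.
records = [
    BotRecord("claims-triage", "Insurance Ops", owner="j.doe",
              developed_by="internal", data_sources=["claims_db"],
              risk_reviewed=True),
    BotRecord("kyc-screening", "Compliance", owner=None,
              developed_by="ExampleVendor Ltd", data_sources=["vendor_feed"]),
    BotRecord("fx-pricing", "Markets", owner="a.lee",
              developed_by="internal", data_sources=["market_data"]),
]

print("Bots needing attention:", inventory_gaps(records))
print("External vendors:", external_vendors(records))
```

Even a simple register like this gives risk managers a starting point for the questions that follow: which bots lack an accountable owner, which have never been reviewed, and which depend on third parties.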

Looking ahead

While there are still significant unknowns about the future evolution of AI and its associated risks, there are a few things that we know for sure: financial services firms will continue to develop and deploy AI across the organization; new risks and compliance issues will continue to emerge; and risk management and business functions will face continued pressure to ensure that the AI and associated risks are being properly managed.

The reality is that, given the rapid pace of change in the markets, financial institutions will need to make faster decisions that enable them to move from ideation to revenue with speed. And that means they will need to greatly improve the processes they use to evaluate, select, invest in and deploy emerging technologies. Those that get it right can look forward to competitive differentiation, market growth and increased brand value. Those that delay or take the wrong path may find themselves left behind.

5 questions financial services boards and lines of business should be asking about AI

- How does the organization's use of AI align with the organizational strategy, and how does it maximize the value of strategic outcomes?

- What new risk and compliance issues is AI introducing into the organization and how does that impact our organizational risk profile?

- How are we leveraging external experts and encouraging our existing workforce to learn the AI and technology skills that the organization requires to properly manage new technologies?

- Do the organization's risk professionals understand the emerging technologies and their associated operational, compliance and regulatory risks?

- Is risk management properly embedded into the AI design and development process to ensure risks are identified and managed early?
